| Server © IRCAM - Centre Pompidou 1996-2005. All rights reserved in all countries. |

Traditionally, composers have had essentially one medium through which to express their musical ideas in a form an audience can appreciate: the sounds that musicians can elicit from traditional instruments. With the advent of computers and other equipment for processing digital signals, an entirely new means of musical expression has become available. A composer who uses these electronic devices is limited only by imagination in creating an "orchestra" of sounds.

Music that seeks to integrate computer-generated sounds with traditional instruments presents a composer with a great challenge. Not only must the composer express musical ideas convincingly, but he or she must also do so in a manner that is readily translatable into both mediums. Moreover, the ideas must be resilient enough to be passed back and forth between the two mediums during a performance. Otherwise the listener might wonder what role the computer was meant to play in relation to the other instruments and be puzzled (and perhaps even repelled) by the lack of coherence.

Exploring possible musical relations between computers and traditional instruments requires much communication between composers and those who design computer hardware and software. Through such collaboration, electronic devices can be constructed that serve the composer's immediate purpose while preserving enough generality and flexibility for future musical exploration. The task is complicated by the fact that a composition's musical complexity is usually not commensurate with the technical complexity needed to realize it: what appears to be a simple musical problem often defies an easy technological solution. Perhaps for the first time in history, a composer has to explain and formalize the way he or she develops and manipulates concepts, themes and relations in a musical context so that technicians (who may have little musical training) can bring them into existence. These are the kinds of problems we confront at the Institut de Recherche et Coordination Acoustique/Musique (IRCAM). The institute, part of the Centre Georges-Pompidou in Paris, is dedicated to musical and scientific research aimed at integrating the traditional instrumental medium with the new medium afforded by computers.

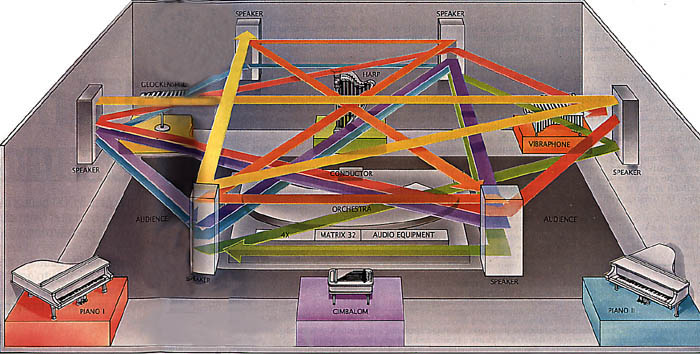

"Spatialization" of the sounds produced by six instrumental soloists at their simultaneous entrance in Répons, a composition by one of the authors (Boulez), involves circulating each sound among four speakers in a pattern shown by an arrow of a color corresponding to that of the soloist's instrument. The speed with which a sound moves around the performance hall depends directly on the loudness of the sound. Because the sounds of the instruments die out at different rates, the sounds slow down at different rates. Several technicians seated at a panel just behind the orchestral ensemble control the various electronic and audio devices that make such an effect possible.

The relation between the two mediums can be explored along several lines. One line of study seeks to model how common instruments produce their characteristic sounds, so that the models can then be applied to synthesize a palette of sounds closely or distantly related to the instrumental sounds [see "The Computer as a Musical Instrument," by Max V. Mathews and John R. Pierce; SCIENTIFIC AMERICAN, February 1987]. The aim is to make it possible for a composer to score music for a computer as though it were a traditional instrument, specifying the kinds of sounds a computer operator is to elicit and when and how they are to be produced. Another line of study searches for ways in which the sound of traditional instruments can be modified. With this approach, the music-making capabilities of an entire ensemble can be extended beyond human or instrumental limits in one stroke.

Making a sound with a computer requires generating a sequence of binary numbers, called samples, that describes the sound's waveform: the air-pressure fluctuations of the sound as a function of time. The samples can then be made audible by converting them into a sequence of proportional voltages, "smoothing" and amplifying the stream of discrete voltages and sending the electrical signal to a loudspeaker. The number of samples the computer must generate for each second of sound, called the sampling rate, depends on the highest component frequency of the sound's air-pressure fluctuations. In particular, the sampling rate must be at least twice the highest component frequency. For most purposes this means that if a computer is to synthesize or transform a sound, it must be capable of generating or manipulating between 16,000 and 40,000 samples in one second (corresponding to highest frequencies of 8,000 to 20,000 hertz), each of which can require a number of calculations.
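The sampling requirement can be illustrated with a short Python sketch. It assumes an idealized sine wave and simply evaluates it once per sampling period; the function names are hypothetical and have nothing to do with how the 4X itself was programmed.

```python
import math

def min_sampling_rate(highest_freq_hz: float) -> float:
    """Smallest sampling rate that captures a given highest component frequency."""
    # The rate must be at least twice the highest frequency present.
    return 2.0 * highest_freq_hz

def sample_sine(freq_hz: float, sampling_rate: int, duration_s: float) -> list:
    """Samples of an ideal sine wave, one value every 1/sampling_rate seconds."""
    n = int(round(sampling_rate * duration_s))
    return [math.sin(2 * math.pi * freq_hz * i / sampling_rate) for i in range(n)]

# Highest frequencies of 8 kHz and 20 kHz give the 16,000 to 40,000 range above.
for f in (8_000, 20_000):
    print(f"{f} Hz needs at least {min_sampling_rate(f):.0f} samples per second")

one_second = sample_sine(440.0, 40_000, 1.0)
print(len(one_second))  # 40000
```

Even this single 440-hertz tone requires 40,000 numbers for one second of sound at the upper rate, which is why real-time processing long demanded special hardware.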

In the past such processing of sounds could be done only slowly and painstakingly on a computer. Hence a composer wanting to use computer-processed sounds together with sounds produced by "live" instrumentalists first had to record the processed sounds on tape so that they could later be played back during a performance with the instrumentalists. But a tape recorder lacks the suppleness in timing that is so crucial in live concerts: give and take with the tempo of a piece is one of the basic features of music. Moreover, prerecorded material may disappoint people who enjoy seeing musicians play their instruments on stage.

Today computers are fast and powerful enough to synthesize original sounds or transform instrumental sounds in "real time": in step with the instrumentalists. Composers can now blend the role of the computer with that of the other instruments much more easily and thereby demolish the rather artificial barrier that often existed between the two types of instruments.

The real-time transformation of instrumental sounds is particularly interesting for several reasons. Altering the sound of traditional instruments after they have been produced by musicians enables a composer to explore unfamiliar musical territory even while scoring for the instruments with which both he and the audience are familiar. The contrast between the familiar and the unfamiliar can thus be readily studied by creating close and distant relations between the scored instrumental passages and their computer-mediated transformation. In addition, since the transformations are done instantaneously, they capture all the spontaneity of public performance (along with its human imperfections).

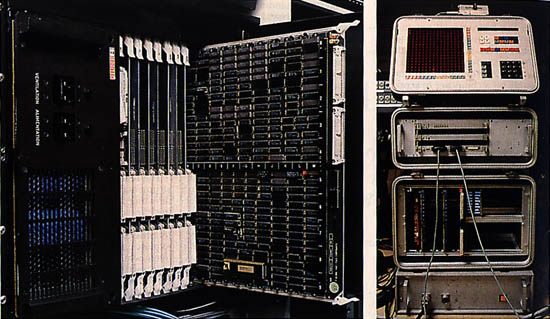

Electronic devices that have integral roles in performances of Répons include the 4X (left) and the Matrix 32 (right). The 4X consists of eight processor boards, each of which can be independently programmed to store, manipulate and recall digitized sound waveforms: sequences of numbers that correspond to the air-pressure fluctuations of a sound. The Matrix 32 is basically a programmable audio-signal traffic controller, routing audio signals from the soloists to the 4X and from the 4X to the speakers.

The equipment for electronically modifying sound in real time has only recently been developed in transportable form, allowing it to be brought into the concert hall. One such device, the 4X, is the fourth generation in a series of real-time digital-signal processors used at IRCAM not only for transforming sounds but also for analyzing and synthesizing them. The prototype was designed and built at IRCAM in 1980 by Giuseppe Di Giugno with assistance from Michel Antin, and the final version was manufactured in 1984 by the French company SOGITEC.

Capable of up to 200 million operations per second, the 4X is made up of eight processor boards, each of which can be independently programmed with any combination of methods for processing digital signals. In a technique known as additive synthesis, for example, musical sounds are generated by adding large numbers of sinusoidal waveforms. Each board in the 4X is capable of generating 129 such waveforms. Each board can also be programmed to have as many as 128 different filters, which can be applied to transform sounds in real time. A processor board also has its own so-called wave-table memory, which allows it to store as much as four seconds of sound and then to play back the sound in any rhythmic pattern.
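Additive synthesis can be sketched in a few lines of Python, assuming each partial is a sinusoid of fixed frequency and fixed amplitude. A 4X board could sum up to 129 waveforms in real time; this illustrative version sums eight, offline, with arbitrarily chosen amplitudes.

```python
import math

def additive_synth(partials, sampling_rate=40_000, duration_s=0.01):
    """Sum of sinusoids; `partials` is a list of (frequency_hz, amplitude) pairs."""
    n = int(round(sampling_rate * duration_s))
    samples = []
    for i in range(n):
        t = i / sampling_rate
        # Each output sample is the sum of every sinusoid evaluated at time t.
        samples.append(sum(a * math.sin(2 * math.pi * f * t) for f, a in partials))
    return samples

# Illustrative tone: eight partials at whole-number multiples of 220 Hz,
# with amplitudes falling off as 1/k (an arbitrary choice for this sketch).
partials = [(220.0 * k, 1.0 / k) for k in range(1, 9)]
samples = additive_synth(partials)
```

Real instrument-like sounds also need each partial's amplitude to change over time; keeping them fixed here keeps the example short.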

The basic operations needed to manipulate the digital signals are coded in so-called modules, or self-contained subprograms, that can be interconnected so that the output from one module is the input for another. The modules themselves, as well as the connections between them, are programmed by means of "patches": higher-level programs written in a computer language designed by one of us (Gerzso) and implemented by Patrick Potacsek and Emmanuel Favreau. (The terms "patch" and "module" are holdovers from the days of analog sound synthesizers, which had actual patch cords to interconnect tangible oscillator, amplifier and filter modules.)
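The module-and-patch idea can be sketched in Python as small callable objects wired in sequence. This is a hypothetical simplification: the `Gain`, `OnePoleLowpass` and `Patch` classes are invented for illustration, and a real 4X patch could connect modules in more general networks than a single chain.

```python
from typing import Callable, List, Tuple

class Gain:
    """Amplifier module: scales each sample by a fixed factor."""
    def __init__(self, factor: float) -> None:
        self.factor = factor

    def __call__(self, x: float) -> float:
        return self.factor * x

class OnePoleLowpass:
    """Simple filter module: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    def __init__(self, a: float) -> None:
        self.a = a
        self.y = 0.0  # filter state carried from one sample to the next

    def __call__(self, x: float) -> float:
        self.y += self.a * (x - self.y)
        return self.y

class Patch:
    """Hypothetical patch: an ordered chain of named modules, each feeding the next."""
    def __init__(self) -> None:
        self.modules: List[Tuple[str, Callable[[float], float]]] = []

    def connect(self, name: str, module: Callable[[float], float]) -> "Patch":
        self.modules.append((name, module))
        return self

    def process(self, x: float) -> float:
        for _name, module in self.modules:
            x = module(x)  # the output of one module is the input of the next
        return x

patch = Patch().connect("amp", Gain(0.5)).connect("filter", OnePoleLowpass(0.25))
out = [patch.process(x) for x in (1.0, 1.0, 1.0)]
```

In this sketch, loading a different patch simply means building a different chain of modules.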

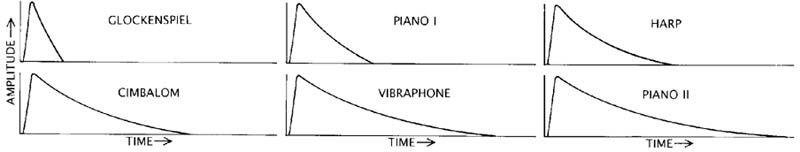

Waveform Envelopes of sound reflect the way the amplitude, or loudness, of the sound varies with time. The envelopes produced by each of six solo instruments in Répons are similar in shape, displaying a steep attack, or beginning, followed by a gentler decay, or end. The duration of a decay depends on both the pitch of the sound and the instrument on which it is played.

Individual patches are stored on a magnetic disk of the 4X's "host" machine and can be loaded into the 4X in half a second or less. The host machine also runs a real-time operating system and event scheduler (developed by Miller Puckette, Michel Fingerhut and Robert Rowe), which tells the 4X what program to run and when. Hence during a musical performance a number of different patches, each of which essentially "rebuilds" the 4X in a fraction of a second, can be loaded on cue. Music demands this kind of flexibility.
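Loading patches on cue can be pictured as a queue of (cue number, patch name) events that is drained as cues arrive. The sketch below is a hypothetical illustration only; the class name, the patch names and the use of integer cue numbers are assumptions, not details of the IRCAM scheduler.

```python
import heapq

class CueScheduler:
    """Hypothetical sketch: switch to a named patch when its cue is reached."""
    def __init__(self) -> None:
        self._pending = []          # min-heap of (cue_number, patch_name)
        self.current_patch = None   # patch currently "loaded" on the processor
        self.history = []           # (cue_number, patch_name) pairs, in load order

    def schedule(self, cue: int, patch_name: str) -> None:
        heapq.heappush(self._pending, (cue, patch_name))

    def on_cue(self, cue: int) -> None:
        # Load every patch whose cue number has been reached, in cue order.
        while self._pending and self._pending[0][0] <= cue:
            due_cue, name = heapq.heappop(self._pending)
            self.current_patch = name
            self.history.append((due_cue, name))

sched = CueScheduler()
sched.schedule(2, "delays_and_shifts")
sched.schedule(1, "spatialize")
sched.on_cue(1)
print(sched.current_patch)  # spatialize
```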

Musical performances in a large auditorium also demand flexibility in switching and mixing sounds among speakers. This is the function of another piece of electronic equipment developed at IRCAM: the Matrix 32. The device, designed and built by Michel Starkier and Didier Roncin, serves as a kind of audio-signal traffic controller: it establishes connections between a set of inputs (the signals coming from microphones or the 4X) and a set of outputs (signals going to the 4X or the speakers) and also specifies the level of the output signals. By means of software written by one of us (Gerzso), it can at any given moment be programmed to route a soloist's sound to a particular speaker for amplification. At another moment it might route the sound of each of several soloists to different module inputs in the 4X and send the transformed sounds to different speakers. The Matrix 32 can be reconfigured in about a tenth of a second.
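A routing matrix of this kind can be modeled as a table of levels, with one row per input and one column per output. The sketch assumes simple linear mixing; the sizes and gain values are invented, and it does not attempt to reproduce the Matrix 32's actual dimensions or software.

```python
def route(inputs, gains):
    """Mix inputs to outputs: outputs[j] = sum over i of gains[i][j] * inputs[i].

    gains is a num_inputs x num_outputs table of levels; 0.0 means "not connected".
    """
    num_outputs = len(gains[0])
    outputs = [0.0] * num_outputs
    for i, x in enumerate(inputs):
        for j in range(num_outputs):
            outputs[j] += gains[i][j] * x
    return outputs

# Two soloist microphones, three outputs (say two speakers and one 4X input).
# Reconfiguring the router amounts to swapping in a different gains table.
gains = [
    [1.0, 0.0, 0.0],   # soloist 1 -> speaker A at full level
    [0.0, 0.5, 0.5],   # soloist 2 -> speaker B and the 4X input at half level
]
print(route([0.8, 0.4], gains))
```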

Both the 4X and the Matrix 32 have integral roles in Répons, a composition by one of us (Boulez) for six instrumental soloists, chamber orchestra and real-time digital-signal processors. The work was commissioned by the Southwest German Radio and premiered in Donaueschingen, West Germany, in 1981. It was most recently performed in 1986 as part of the program of a five-city U.S. tour by the Ensemble InterContemporain, a French avant-garde chamber-music group.

"Répons" is a medieval French term for a specific type of antiphonal choral music: a compositional form in which a soloist's singing is always answered by that of a choir. The term is an apposite name for the contemporary composition, since it explores calls and responses on many different musical levels. In Répons one can find all kinds of dialogues: between the soloists and the ensemble, between a single soloist and the other soloists, and between transformed and untransformed passages. Almost all other aspects of music are also involved in such back-and-forth interplay: pitch (the perceived frequency of a tone), rhythm (the pattern and timing of beats), dynamics (the loudness of a tone) and timbre (the characteristic tonal quality of an instrument's sound). Real-time transformations of the solo instruments' sounds are necessary to bring about many of these oppositions. (To make the transformations possible, all the solo instruments are equipped with microphones, so that electrical analogues of their sounds are instantly available to be processed and sent on to speakers.) The traditional antiphonal form also suggests two further ideas that were incorporated into Répons. The first is the notion of displacing the music in space, since the soloist and the choir are in different physical locations. Building on that notion, the six soloists in a typical performance of Répons are positioned at the periphery of the concert hall (as are six loudspeakers), whereas the instrumental ensemble is placed in the center. (The audience surrounds the ensemble.)

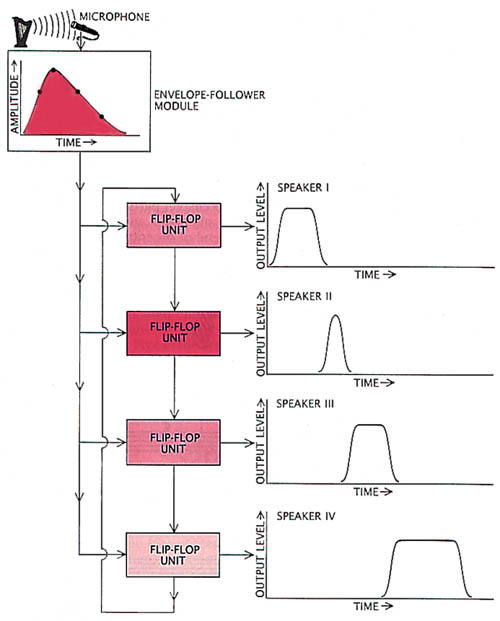

4X Spatialization Program takes the sound that is picked up by a microphone and directs an envelope-follower module, or subprogram, to generate a timing signal (color) whose frequency (indicated by the intensity of color) changes in step with changes in the amplitude of the sound's waveform envelope. The timing signal in turn serves as input for four other modules called flip-flop units (FFU's), which control the level of the sound broadcast from each of four speakers. The signal passes from one FFU to the next as one is switched off and the next is switched on. (Only one FFU can be on at any given time.) The signal's frequency determines how long a particular FFU remains on; the higher the frequency is, the sooner the FFU is switched off. In this illustration the timing-signal frequency corresponding to the first point on the envelope controls how long the first FFU is on, the frequency corresponding to the second point on the envelope controls how long the second FFU is on, and so on. Arranging the FFU's in a loop makes the sound circulate repeatedly from speaker to speaker at a speed determined by its amplitude. Because the sound level takes some time to rise to its maximum and to fall back to zero, there is a bit of overlap among the speakers.

Displacement can also be understood in more general terms as a shift along any dimension. If one considers frequency as a dimension by which to characterize musical sounds, then a displacement amounts to shifting frequencies, similar to the common musical device of transposing a melody into a different key. By the same token a displacement in time amounts to a delay, since it involves shifting notes into the future.

Another idea that one can draw from antiphonal music is related to the fact that in such music one voice (the soloist) is answered by many voices (the choir). This suggests the notion of multiplication and proliferation of sounds, which can be realized by means of computer processing techniques that take a single note or chord and create a multitude of notes or chords all of which are related to the original one.

Répons opens with a seven-minute movement played only by the instrumental ensemble, in which the musical tension slowly builds toward the entrance of the soloists, whose instruments are a cimbalom (a wire-strung instrument whose strings are struck with hand-held, padded hammers), a xylophone, a glockenspiel, a vibraphone (a xylophone-like instrument), a harp, a Yamaha DX7 keyboard synthesizer and a pair of pianos. (Although there are six soloists, there are eight instruments: one soloist plays both the xylophone and the glockenspiel parts, and another doubles on piano and synthesizer.) At the end of the introduction the soloists make a dramatic entry. Each soloist plays, simultaneously with the others, a different short arpeggio: a chord whose component notes are sounded in sequence from the lowest pitch to the highest. The resonance of the arpeggios then rings throughout the hall for about eight seconds, until the sound has virtually died out. During this flourish the 4X and the Matrix 32 are brought into action for the first time: they take the sounds of the chords the soloists have built note by note on their instruments and shift them from speaker to speaker.

The attention of the audience is thereby suddenly turned away from the center of the hall to the perimeter, where the soloists and the speakers are. The audience hears the soloists' sounds traveling around the hall without being able to distinguish the paths followed by the individual sounds. The overall effect highlights the antiphonal relation between the central group and the soloists by making the audience aware of the spatial dimensions that separate the ensemble from the soloists and that separate the individual soloists as well. We say that the maneuver has "spatialized" the sound.

A soloist's sound does not move at a fixed speed from speaker to speaker; the speed depends directly on the sound's loudness, which at any given time is proportional to the amplitude of the envelope, or contour, of the sound's waveform. The larger the amplitude, the faster the sound appears to move. Although the soloists' instruments produce envelopes that are similar in shape (a very sharp attack followed by an exponentially decreasing decay), the duration of an envelope's decay depends on the pitch of the notes as well as on the instrument on which they are played. For example, high notes on a piano have steeper attacks and shorter decays than its low notes, and a note sounded on a glockenspiel has a steeper attack and a shorter decay than the same note played on a piano.
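An envelope follower can be approximated by a peak detector with exponential release, shown here in Python. This is one common design, assumed for illustration; the `release` constant and the test tone are arbitrary and do not describe the 4X module.

```python
import math

def envelope_follower(samples, release=0.999):
    """Peak-style follower: rise at once to |x|, otherwise decay by `release` per sample."""
    env = 0.0
    out = []
    for x in samples:
        env = max(abs(x), env * release)
        out.append(env)
    return out

# Test tone: sharp attack, then exponential decay, sampled at 8 kHz for one second.
sr = 8_000
tone = [math.exp(-3.0 * i / sr) * math.sin(2 * math.pi * 440 * i / sr) for i in range(sr)]
env = envelope_follower(tone)
```

The follower's output rides along the peaks of the waveform, so it traces the exponential decay of the tone while ignoring the individual oscillations.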

Because the sounds of the soloists' instruments die out at different rates, the sounds also slow down at different rates. The overall impression for the listener is that of a single spectacular gesture slowly breaking up into several parts. Furthermore, as the overall amplitude decreases, the original impression of sounds moving rapidly around the hall is replaced by a sense of immobility.

The amplitude-dependent spatialization is achieved by increasing the sound level of a particular solo instrument to a maximum at one speaker while reducing the instrument's sound level to zero at another. So-called flip-flop modules in the 4X control the simultaneous turning up and turning down of the sound levels and also determine how long the maximum level is maintained at a given speaker [see illustration]. Since the sound of each soloist circulates in a pattern among four speakers, the flip-flop units are arranged in loops of four. A flip-flop module operates on a timing signal whose frequency changes in proportion to changes in the amplitude of the sound's waveform envelope, which is continuously traced by an "envelope follower" module. Hence drops in the envelope's amplitude (as during a sound's decay) lower the timing frequency and thereby cause a flip-flop module to hold the maximum sound level longer at a speaker before the next flip-flop module shifts the sound to the next speaker.
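The amplitude-dependent circulation can be simulated by letting each flip-flop stay on for one period of a timing signal whose frequency is proportional to the envelope. The scale factor `hz_per_unit`, the frequency floor `min_hz` and the decaying envelope are assumptions chosen for illustration, and the crossfades between speakers are ignored.

```python
import math

def spatialize(envelope, total_time, num_speakers=4, hz_per_unit=8.0, min_hz=0.25):
    """List of (speaker, start_time, dwell_time) hops around a loop of speakers."""
    hops = []
    t, speaker = 0.0, 0
    while t < total_time:
        # Timing-signal frequency proportional to the envelope amplitude;
        # the floor keeps the dwell finite as the sound dies away.
        freq = max(hz_per_unit * envelope(t), min_hz)
        dwell = 1.0 / freq  # this flip-flop stays on for one timing period
        hops.append((speaker, t, dwell))
        t += dwell
        speaker = (speaker + 1) % num_speakers  # flip-flops form a loop of four
    return hops

def decaying_envelope(t):
    """Illustrative envelope: full level at t = 0, exponential decay afterward."""
    return math.exp(-0.5 * t)

hops = spatialize(decaying_envelope, total_time=8.0)
for speaker, start, dwell in hops[:5]:
    print(f"speaker {speaker}: on at {start:.3f} s for {dwell:.3f} s")
```

Because the envelope decays, each dwell time is at least as long as the previous one, so the sound slows down as it dies away, as described for the soloists' entrance.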

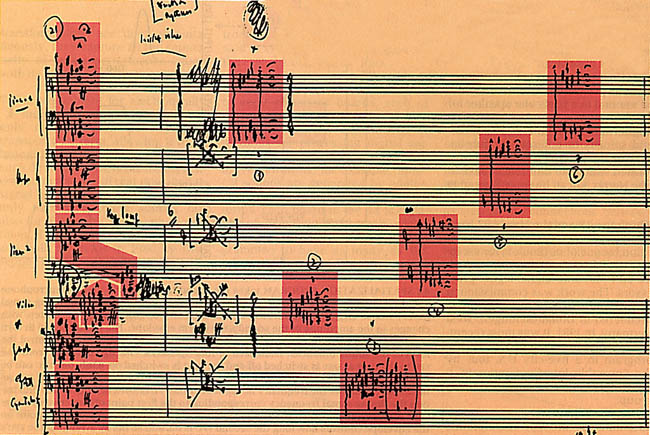

Excerpt from original score of Répons shows chords (highlighted in red) that six soloists play as arpeggios, sounding each note sequentially from bottom to top. The soloists play the first set of arpeggios together and the second set separately.

As soon as the sounds of the spatialized arpeggios have died out sufficiently, the conductor cues each soloist at roughly equal intervals to play another arpeggiated chord, answering the simultaneous arpeggios with separate ones. Five of the arpeggiated chords are routed to the 4X, which continuously writes, or stores, the sounds in its wave-table memory. Immediately after the 4X writes the sound data, it continuously recalls them with 14 "read" modules, so that 14 exact copies of the original sound are produced, each with a different time delay. Each copy is then shifted in frequency by another module in the 4X and played back.
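The write-then-read scheme can be sketched as a circular buffer read by several taps, each lagging the write position by a fixed number of samples. The tap spacing below is hypothetical, and the frequency shift that the 4X applied to each copy is not modeled.

```python
class MultiTapDelay:
    """Circular buffer written once per sample and read by several taps."""

    def __init__(self, max_delay: int, tap_delays: list) -> None:
        if any(d < 0 or d > max_delay for d in tap_delays):
            raise ValueError("each tap delay must lie in [0, max_delay]")
        self.buf = [0.0] * (max_delay + 1)
        self.pos = 0               # next write position
        self.taps = list(tap_delays)

    def process(self, x: float) -> list:
        """Write one input sample; return one output sample per tap."""
        n = len(self.buf)
        self.buf[self.pos] = x
        # Each tap reads the sample written d steps ago (Python's % wraps negatives).
        copies = [self.buf[(self.pos - d) % n] for d in self.taps]
        self.pos = (self.pos + 1) % n
        return copies

# Hypothetical layout: 14 taps spaced 0.1 s apart at a 40 kHz sampling rate.
sr = 40_000
spacing = sr // 10
delay = MultiTapDelay(max_delay=14 * spacing, tap_delays=[k * spacing for k in range(1, 15)])

# Tiny check with an impulse and three taps.
small = MultiTapDelay(max_delay=3, tap_delays=[0, 1, 3])
outputs = [small.process(x) for x in [1.0, 0.0, 0.0, 0.0, 0.0]]
```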

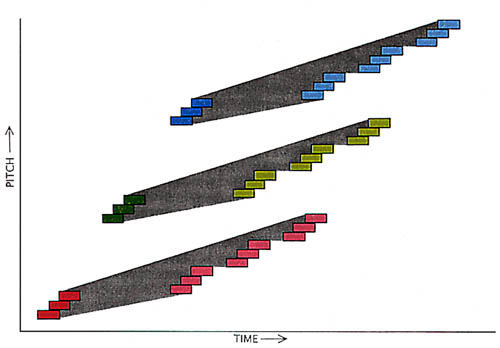

The example just described is essentially an arpeggio (the spreading out of 14 copies in time and frequency) of an arpeggio (the sequentially played notes of a chord) of an arpeggio (the individually cued soloists). By means of the delays and frequency shifting, the idea of an arpeggio (the displacement of musical entities in time and pitch) has been effectively translated from instrumental to electronic composition.
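The nesting of arpeggios can be expressed as repeated displacement of a set of (time, pitch) events. In this hypothetical sketch pitches are counted in half steps, the offsets are arbitrary, and the computer copies are displaced in half steps rather than by a fixed number of hertz as the 4X's frequency shifters did.

```python
from itertools import product

# An event is (onset time in seconds, pitch as a half-step number; 60 = middle C).
def displace(events, offsets):
    """Copy each event once per (dt, dp) offset: a generalized "arpeggio"."""
    return [(t + dt, p + dp) for (dt, dp), (t, p) in product(offsets, events)]

note = [(0.0, 60)]
# Level 1: a three-note chord sounded bottom to top (hypothetical C major triad).
chord_arpeggio = displace(note, [(0.0, 0), (0.1, 4), (0.2, 7)])
# Level 2: three players enter at different times and in different registers.
players = displace(chord_arpeggio, [(0.0, 0), (1.0, -12), (2.0, 12)])
# Level 3: each sound is copied three times, each copy later and higher.
copies = displace(players, [(0.0, 0), (0.3, 2), (0.6, 5)])
print(len(copies))  # 27
```

The same function serves at every level, which is the point of the analogy: only the set of offsets changes.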

There is also a method behind the shifting of pitches in Répons, regardless of whether it is specified in the instrumental composition or the electronic composition. Much of the writing in the piece can be seen as a series of chord derivations based on shifting notes up or down in pitch by various intervals. Without going into too much detail, we can simply say that much of the harmonic material in Répons can be traced to five chords, which are heard in the first bar of the piece. In fact, the six arpeggiated chords played simultaneously by the soloists at their entrance, as well as the ones later played separately, are all derived from the same basic chord. The chords of the soloists' entrance are constructed by transposing the basic chord a half step up and a half step down and then putting pieces of the resulting two chords together in different ways. (A half step is the smallest unit of transposition possible in traditional Western music. Transposing a chord 12 half steps results in the same chord but an octave higher or lower.) The derived chords are also shifted up or down an octave or two so that they are played in different octave ranges on the different solo instruments.
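Transposition by half steps, and the recombination of two transposed chords, can be sketched as follows. Notes are numbered in half steps with 69 standing for the A at 440 hertz (a common convention, assumed here); the basic chord and the particular splice are invented for illustration and are not taken from Répons.

```python
def transpose(chord, half_steps):
    """Shift every note by the same number of half steps."""
    return [p + half_steps for p in chord]

def to_hz(p):
    """Equal-tempered frequency of half-step number p (69 = A at 440 Hz)."""
    return 440.0 * 2 ** ((p - 69) / 12)

def splice(lower_source, upper_source, split):
    """Bottom `split` notes of one chord joined to the upper notes of another."""
    return sorted(lower_source)[:split] + sorted(upper_source)[split:]

basic = [60, 62, 66, 69, 73]              # hypothetical chord, not the one in Répons
down, up = transpose(basic, -1), transpose(basic, +1)
derived = splice(down, up, 2)             # one of many possible recombinations
print(derived)  # [59, 61, 67, 70, 74]
```

Transposing by 12 half steps doubles every frequency, which is why the result is heard as the same chord an octave higher.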

The separately played arpeggios, on the other hand, are obtained by successively transposing the basic chord up an amount equal to the number of half steps between the topmost note of the chord and each of the other notes constituting the chord. In addition the notes of the resulting chords are adjusted up or down an octave to fall between the lowest and the highest note of the basic chord. The procedure in essence "rotates" the chord by folding those notes that exceed the pitch limits back into the chord.
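One reading of this rotation procedure is sketched below. The chord is hypothetical, and folding moves a note by as many octaves as needed, which may occasionally be more than the single octave mentioned in the text.

```python
def fold_into_range(p, lo, hi):
    """Move p by whole octaves until lo <= p <= hi."""
    while p > hi:
        p -= 12
    while p < lo:
        p += 12
    return p

def rotations(chord):
    """Transpose the chord up by the interval from each non-top note to the top note,
    then fold every note back into the chord's original span by octaves."""
    notes = sorted(chord)
    lo, hi = notes[0], notes[-1]
    if hi - lo < 11:
        raise ValueError("span must be at least 11 half steps for folding to fit")
    return [sorted(fold_into_range(p + (hi - q), lo, hi) for p in notes)
            for q in notes[:-1]]

basic = [60, 64, 67, 71, 74]   # hypothetical chord, not the one in Répons
for r in rotations(basic):
    print(r)
```

Each transposition carries one of the chord's notes onto the top note, so every rotated chord keeps the original upper limit while its interior is reshuffled.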

Arpeggio of an Arpeggio of an Arpeggio similar to the one found in Répons is depicted schematically on a graph that has time and pitch as axes. Because an arpeggio can be broadly considered as the displacement in time and pitch of any set of musical entities (not just the notes of a chord), three arpeggiated three-note chords (dark-color boxes) played at different times and in different pitch ranges by instrumentalists can themselves be thought of as constituting an "arpeggio." By the same token, the spreading out in time and frequency of three computer-generated copies (light-color boxes) of each of the arpeggiated chords is also an "arpeggio." In Répons five soloists play seven-note arpeggiated chords that are copied, frequency-shifted and played back 14 times.

The frequency shifts of the 14 copies of the separately played arpeggios also conform to the same general pitch-shifting pattern. Each input chord is transformed in such a way that the pitches of its notes remain the same, although in a different octave. The frequency shifting thereby reinforces the original chord while at the same time giving it a new harmonic quality. Because one basic chord is shifted to create a new set of chords, which in turn are shifted in frequency by the 4X, the net result can be thought of as a transposition of a transposition. The underlying idea linking the instrumental writing and the computer writing in this case is displacement along the frequency dimension.

Transposition by a frequency-shifter module of the 4X is not entirely equivalent to the ordinary transposition of chords, however. The module does not preserve the frequency relations between a tone's partials, or frequency components. Normally a tone has a pitch-determining partial called the fundamental frequency and a number of other partials whose frequencies are generally whole-number multiples of the fundamental. The whole-number frequency ratios of a tone's partials, as well as the partials' relative amplitudes (which vary while a tone is played), determine the tone's timbre. Hence when each partial of a tone is shifted by the same fixed number of hertz, the ratios between the partials are not preserved, and the timbre of the original tone is altered as well. This problem could be overcome if one could shift the frequency of each partial independently by an arbitrary amount. To do so, however, more powerful real-time analysis and control techniques would have to be linked together. This is an area in which we are currently working at IRCAM.
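A short numerical comparison shows why a fixed frequency shift changes timbre while ordinary transposition does not. The fundamental of 220 hertz and the shift of 100 hertz are arbitrary values chosen for the example.

```python
def harmonic_partials(f0, n):
    """Partials at whole-number multiples of the fundamental f0."""
    return [f0 * k for k in range(1, n + 1)]

def frequency_shift(partials, delta_hz):
    """What a frequency shifter does: add the same number of hertz to every partial."""
    return [f + delta_hz for f in partials]

def transpose_partials(partials, half_steps):
    """Ordinary transposition: multiply every partial by the same ratio."""
    r = 2 ** (half_steps / 12)
    return [f * r for f in partials]

def ratios(partials):
    """Frequency of each partial relative to the lowest one."""
    return [f / partials[0] for f in partials]

tone = harmonic_partials(220.0, 4)
print(ratios(transpose_partials(tone, 1)))   # close to [1.0, 2.0, 3.0, 4.0]
print(ratios(frequency_shift(tone, 100.0)))  # [1.0, 1.6875, 2.375, 3.0625]
```

After transposition the partials keep their whole-number ratios; after the fixed shift they do not, so the shifted tone is no longer harmonic.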

Transpositions, or shifts in pitch, of five basic chords (on gray staff) constitute much of the harmonic material of Répons. The chords that are played by the soloists at their entrance are all derived from one basic chord (in box). The boxed chord is the same as the one played by the vibraphonist. Shifting the chord up two octaves and a half step and down two octaves and a half step yields the chords played respectively on the glockenspiel and the second piano. The chords for the other soloists are constructed by combining the top and bottom parts of the three chords and shifting the pitches up or down an octave so that each chord's notes fall within two octaves and a half step.

The computer, although a relative newcomer to music, has already opened intriguing perspectives from which composers and sound designers can explore new ideas or novel juxtapositions of old ideas. To do so they need powerful devices that can be programmed in a number of ways. No composer or sound designer can be satisfied with a device that allows the study of only one method for analyzing, synthesizing or transforming sound.

For example, the electronic manipulations involved in the two short passages from Répons we have described were implemented by means of a single 4X patch that programs six modules for spatialization, five for multiple delays, 30 for frequency shifting and assorted noise-reduction modules for each soloist. Yet both passages combined account for only about 30 seconds of a work that lasts for almost 45 minutes, during which some 50 other patches have to be loaded on cue. Clearly machines of extraordinary flexibility are necessary for performing mixed-medium pieces such as Répons in concert.

Unfortunately the recent trend has been toward the manufacture of specialized devices, each of which has its own method for processing digital signals. This is partly the result of marketing constraints, which demand that the devices be relatively cheap. Yet trying to link several electronic devices inevitably results in problems of control and coordination. Moreover, only a fraction of the total computing power can be applied at any given time. In addition to being wasteful, this arrangement makes it impossible to muster the total combined computational power into one method for processing digital signals. It is IRCAM's goal to help composers, sound designers and electrical engineers solve these problems without losing sight of the music.

Interested readers can obtain a cassette tape containing short excerpts of several computer-music compositions, including Répons, by sending $8.50 to Département Diffusion, IRCAM, 31, rue Saint-Merri, 75004 Paris, France.

____________________________

Server © IRCAM-CGP, 1996-2008 - file updated on .
