All rights reserved in all countries.

Server © IRCAM - Centre Pompidou 1996-2005.

5th International Conference: Interface to Real & Virtual Worlds, Montpellier, France, May 1996

Copyright © Ircam - Centre Georges-Pompidou 1996

From an auditory point of view, the spatial cues to be reproduced can be divided into two categories: the auditory localization of sound sources (desirably in three dimensions), and the room effect resulting from indirect sound paths (reflections and reverberation from walls and obstacles). The benefits of spatial sound reproduction over mono reproduction are significant in a wide range of industrial, research, artistic and entertainment applications. These include teleconferencing, simulation, virtual reality and telerobotics, professional audio and computer music, and advanced human-machine interfaces for data representation or for visually disabled users. Spatial sound processing is a key factor for improving the legibility and naturalness of a virtual scene, because it restores the ability of our perception to exploit spatial auditory cues in order to segregate sounds emanating from different directions [1-3]. It further allows manipulation of the spatial attributes of sound events for creative purposes or 'augmented reality' [3]. In all applications, the coherence of auditory cues with those conveyed by cognition and by other modes of perception (visual, haptic...), or the absence of such cues, must be taken into account.

Spatial sound reproduction requires the use of an electro-acoustic apparatus (headphones or loudspeaker system), along with a technique or format for encoding directional localization cues on several audio channels for transmission or storage. This encoding can be achieved in several ways:

a) Recording of an existing sound scene with coincident or closely-spaced microphones (stereo microphone pair, dummy head, Ambisonic microphone [4]), allowing simultaneous reproduction of several sound sources and their relative positions in space. This approach, of course, considerably limits the possibilities of subsequent spatial manipulation and of adaptation to various reproduction contexts or interactive applications.

b) Synthesis of a virtual sound scene: a signal processing system reconstructs the localization of each sound source and the room effect, starting from individual sound signals and parameters describing the sound scene (position, orientation and directivity of each source, and acoustic characterization of the room or space). An example of this approach, in the field of professional audio, is multitrack recording and post-processing using a stereo mixing console and artificial reverberators.

c) A combination of approaches (a) and (b), such as a live multitrack recording in which a stereo signal, captured by a main microphone pair, is mixed with signals captured by several spot microphones located near the individual instruments.

In the context of interactive applications, where elements of the sound scene can be dynamically modified by the actions of the user or performer (e. g. movements of sound sources), a real-time spatial synthesis technique is necessary. This requires local signal processing hardware in each display interface (videogame console, teleconference interface, concert sound system...). It also involves a processing and transmission cost which increases linearly with the number of sound events to be synthesized simultaneously.

From a general point of view, the spatial synthesis parameters can be provided by the analysis of an existing scene (through position trackers, cameras, adaptive acoustic arrays...), by the user's actions (human-machine interfaces: mixing desk, graphic or gestural interfaces...), or even by a stand-alone process (videogames, simulators). For instance, a head-tracking system may be used to update a synthetic image on a head-mounted display according to the movements of the spectator. In that case, the position coordinates it provides can simultaneously be used to update the synthetic sound scene reproduced over headphones. In headphone reproduction, this is necessary to ensure that the perceived positions or movements of sound sources in the virtual space are independent of the movements of the listener.
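The compensation described above can be sketched as follows. The sketch assumes a head tracker that reports only the yaw angle (head rotation in the horizontal plane). It also assumes that source azimuths are given in the same world frame, measured counter-clockwise from the front. The function name is hypothetical. The point is that the panning module receives the source azimuth relative to the head, so a source fixed in the virtual space stays fixed while the listener turns.

```python
def head_relative_azimuth(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Azimuth of a source relative to the listener's head, in degrees.

    Hypothetical helper: both angles are in the same world frame
    (counter-clockwise from the front, horizontal plane only).
    The result is wrapped to the interval [-180, 180).
    """
    relative = source_azimuth_deg - head_yaw_deg
    # Wrap so the panning module always receives a canonical angle.
    return (relative + 180.0) % 360.0 - 180.0


# A source straight ahead in the virtual room (0 degrees): when the listener
# turns 30 degrees to the left, it must be rendered 30 degrees to the right.
print(head_relative_azimuth(0.0, 30.0))     # -30.0
print(head_relative_azimuth(170.0, -20.0))  # -170.0
```

In a real-time system, this function would be re-evaluated at each tracker update, before the panning parameters are recomputed.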

In the next section of this paper, the general principles and limitations of current spatial sound processing and room simulation technologies will be briefly reviewed. In the third section, a perceptually-based processor (the Spatialisateur) will be introduced. In conclusion, the advantages of this approach will be illustrated in practical applications.

In a natural situation, directional localization cues (azimuth and elevation of

the sound source) are typically conveyed by the direct sound path from the

source to the listener. However, the intensity of this direct sound is not a

reliable distance cue in the absence of a room effect, especially in an

electro-acoustically reproduced sound scene [1, 2]. Thus the typical mixing

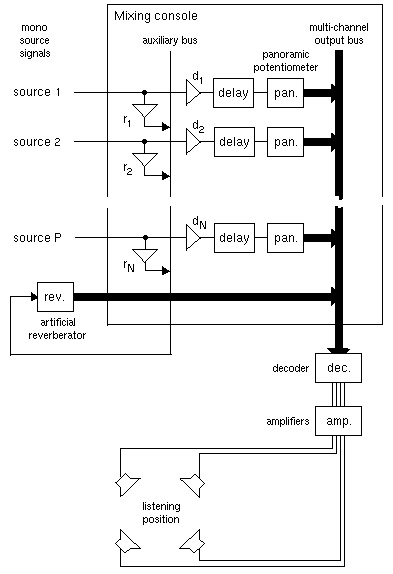

structure shown on Figure 1 defines the minimum signal processing system for

conveying three-dimensional localization cues simultaneously for N sound

sources over P loudspeakers.

Each channel of the mixing console receives a mono recorded signal (devoid of room effect) from an individual sound source. It also contains a panning module which synthesizes directional localization over the P loudspeakers; in stereo mixing consoles, this module is usually called a panoramic potentiometer, or 'panpot'. All source signals feed an artificial reverberator, which delivers different output signals to the loudspeakers. This creates a diffuse (non-localized) room effect to which each sound source can contribute with a different intensity. In each channel, the relative values of the gains d (direct) and r (reverberation) can be used to control the perceived distance of the corresponding sound source. This basic principle of current mixing architectures, generally designed for two output channels, can be extended to any number of loudspeakers in two or three dimensions by designing an appropriate 'panpot'. This extension was proposed by Chowning [5], who designed a spatial processing system for computer music. It allowed two-dimensional control of the localization and movements of virtual sound sources over four loudspeakers, including the simulation of the Doppler effect. The localization was controlled in polar coordinates (azimuth and distance), referenced to a listener placed at the center of the loudspeaker system, using a gestural control interface.
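The role of the gains d and r can be sketched under an assumed distance law. The direct gain is taken as proportional to 1/D and the reverberation send as proportional to 1/sqrt(D), where D is the distance relative to a reference distance. This law and the function names are illustrative assumptions, not the settings of any particular console. Because the direct sound falls off faster than the reverberant send, the direct-to-reverberant ratio decreases with distance.

```python
import math


def distance_gains(distance, reference_distance=1.0):
    """Direct (d) and reverberation-send (r) gains for one console channel.

    Assumed law: d = 1/D and r = 1/sqrt(D), where D is the distance relative
    to a reference distance, clamped at 1 so that gains never exceed unity.
    """
    D = max(distance / reference_distance, 1.0)
    return 1.0 / D, 1.0 / math.sqrt(D)


def direct_to_reverberant_db(distance):
    """Direct-to-reverberant gain ratio in dB under the assumed law."""
    d, r = distance_gains(distance)
    return 20.0 * math.log10(d / r)


for dist in (1.0, 4.0, 16.0):
    d, r = distance_gains(dist)
    print(f"D = {dist:4.1f}   d = {d:.3f}   r = {r:.3f}   "
          f"direct/reverb = {direct_to_reverberant_db(dist):5.1f} dB")
```

With this law, the direct-to-reverberant ratio falls by about 3 dB per doubling of distance, while both gains stay tied to a single distance parameter.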

Figure 1: Typical mixing architecture (shown here assuming 4-channel loudspeaker reproduction) combining a mixing console for synthesizing directional effects and an external reverberation unit for synthesizing temporal effects (recent digital mixing consoles include a tunable delay line in each channel). With some spatial encoding methods, an additional decoding stage is necessary for delivering the processed signal to the loudspeakers.

Many systems used today in computer music are based on Chowning's process. For the directional encoding of the direct sound over P loudspeakers, Chowning used a pairwise intensity panning principle (sometimes called 'discrete surround'), derived from the conventional stereo panpot. Alternatively, it is always possible to design a panning module which emulates the encoding achieved in free field by any spatial sound pickup technique using several microphones. An attractive approach is the three-dimensional 4-channel Ambisonic 'B-format', for which decoders have been designed to accommodate various multi-channel loudspeaker setups [4]. However, there is currently no multi-channel reproduction technique allowing accurate 3D reproduction over a wide listening area with a reasonable number of loudspeakers. Current techniques either assume a centrally located listener (as in Ambisonics) or assume a frontal bias for primary sound sources in the reproduced scene. The latter approach is found in formats initially developed for movie theaters, which have recently evolved into standards for HDTV, multimedia and domestic entertainment [6]. In these formats, the side or rear loudspeakers are not intended for reproducing lateral or rear sound sources, but only for diffuse ambiance and reverberation.
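The two panning strategies mentioned above can be sketched as follows. This is a minimal illustration, not Chowning's implementation. The pairwise panner assumes P loudspeakers equally spaced on a circle and a sine/cosine (constant-power) law between the two loudspeakers adjacent to the source direction. The B-format encoder uses the traditional first-order convention with W scaled by 1/sqrt(2), which is only one of several normalizations in use. Function names are hypothetical.

```python
import math


def pairwise_pan(azimuth_deg, num_speakers):
    """Constant-power pairwise panning over P equally spaced loudspeakers.

    Assumed layout: loudspeaker k at azimuth k * 360 / P degrees, counter-
    clockwise from the front. Only the two loudspeakers adjacent to the
    source direction receive signal, and their squared gains sum to 1.
    """
    spacing = 360.0 / num_speakers
    position = (azimuth_deg % 360.0) / spacing    # fractional loudspeaker index
    first = int(position) % num_speakers
    second = (first + 1) % num_speakers
    frac = position - int(position)               # 0 at `first`, 1 at `second`
    gains = [0.0] * num_speakers
    gains[first] = math.cos(frac * math.pi / 2.0)
    gains[second] = math.sin(frac * math.pi / 2.0)
    return gains


def bformat_encode(sample, azimuth_deg, elevation_deg):
    """First-order B-format (W, X, Y, Z) for a plane wave from one direction.

    Assumes the traditional convention with W scaled by 1/sqrt(2).
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (sample / math.sqrt(2.0),
            sample * math.cos(az) * math.cos(el),
            sample * math.sin(az) * math.cos(el),
            sample * math.sin(el))


print(pairwise_pan(45.0, 4))        # equal gains on loudspeakers 0 and 1
print(bformat_encode(1.0, 90.0, 0.0))
```

The pairwise panner depends on the loudspeaker layout. The B-format signals do not: they describe only the sound field direction, and a separate decoder maps them to a given loudspeaker setup.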

Binaural and transaural processors

Another attractive approach for designing a three-dimensional panning module is to emulate a dummy-head microphone recording. This method could be expected to provide exact reproduction over headphones, since it directly synthesizes the pressure signals at the two ears [1]. The panning module can be implemented in the digital domain as shown in Figure 2. Using a dummy head and a loudspeaker in an anechoic room, a set of 'head-related transfer functions' (HRTFs) can be measured in order to simulate any particular direction of incidence of a sound wave in free field (i. e. without reflections) [1, 2].
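The static binaural panpot of Figure 2 reduces to filtering the mono source signal through the pair of head-related impulse responses (the time-domain HRTFs) for the desired direction. The sketch below uses direct-form FIR convolution and a toy, hypothetical HRIR pair. A real database would supply measured responses a few hundred taps long, and a dynamic panpot would also switch or interpolate between them as the direction changes.

```python
def convolve(signal: list[float], impulse_response: list[float]) -> list[float]:
    """Direct-form FIR convolution (full length: len(signal) + len(ir) - 1)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out


def binaural_pan(mono, hrir_left, hrir_right):
    """Static binaural panpot: filter the mono source through the left and
    right HRIRs measured for the desired direction of incidence."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy, hypothetical HRIR pair for a source on the listener's left: the right
# (far) ear receives the sound 2 samples later and attenuated.
hrir_l = [1.0, 0.3]
hrir_r = [0.0, 0.0, 0.5, 0.15]
left, right = binaural_pan([1.0, 0.0, 0.0], hrir_l, hrir_r)
print(left)   # [1.0, 0.3, 0.0, 0.0]
print(right)  # [0.0, 0.0, 0.5, 0.15, 0.0, 0.0]
```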

Early binaural processors and binaural mixing consoles, developed in the late 1980s [7], used powerful signal processors in order to implement the HRTF filters accurately in real time. Further research on the modeling of HRTFs has led to efficient implementations of time-varying HRTF filters that make it possible to simulate dynamic movements of sound sources [2, 8, 9]. A dynamic implementation requires about twice the computational power necessary for a static implementation [9]. Thus, with off-the-shelf programmable digital signal processors such as the Motorola DSP56002, a straightforward implementation of a variable binaural panpot using 200-tap convolution filters would require the signal processing capacity of two DSPs at a sample rate of 48 kHz. This cost can be reduced to less than 150 multiply-accumulates per sample period (30% of the capacity of one DSP) using an implementation based on minimum-phase pole-zero filters and variable delay lines [9].
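The cost estimate above can be checked with a short calculation. The per-DSP capacity of 500 multiply-accumulates per sample period is an assumption, inferred from the statement that 150 multiply-accumulates use about 30% of one DSP. The other figures are taken from the text.

```python
import math

SAMPLE_RATE_HZ = 48_000
TAPS_PER_EAR = 200
EARS = 2
DYNAMIC_FACTOR = 2        # time-varying filters: about twice the static cost [9]

# Assumed DSP capacity, inferred from "150 MACs per sample ~ 30% of one DSP".
DSP_MACS_PER_SAMPLE = 150 / 30 * 100       # = 500 multiply-accumulates

static_macs = TAPS_PER_EAR * EARS          # per sample period
dynamic_macs = static_macs * DYNAMIC_FACTOR
macs_per_second = dynamic_macs * SAMPLE_RATE_HZ
dsps_needed = math.ceil(dynamic_macs / DSP_MACS_PER_SAMPLE)

print(static_macs, dynamic_macs, macs_per_second, dsps_needed)  # 400 800 38400000 2
```

The dynamic 200-tap panpot needs about 800 multiply-accumulates per sample, hence two DSPs. The pole-zero implementation, at under 150, fits well within one.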

Figure 2: Principle of binaural synthesis, which simulates a free-field listening situation over headphones. The azimuth and elevation of the virtual sound source are controlled by loading two sets of filter coefficients from a database of 'Head Related Transfer Functions', according to a specified direction.

Because the HRTFs actually encode diffraction effects which depend on the shape of the head and pinnae, the applications of binaural technology in broadcasting and recording are limited by the individual nature of the HRTFs. To achieve perfect reproduction over headphones, it would be necessary to use measurements made specifically for each listener (with microphones inserted in his or her ears), instead of an HRTF database measured on a dummy head. A typical consequence of using non-individual HRTFs is the difficulty of reproducing, over headphones, virtual sound sources localized in the frontal sector. These will often be heard above or behind, near or even inside the head [2]. An additional constraint of headphone reproduction is that it calls for a head-tracking system allowing real-time compensation of the listener's movements in the binaural synthesis process. Under this condition, in real-time interactive applications such as virtual reality, binaural synthesis offers an attractive solution. The dynamic localization cues conveyed by head-tracking largely compensate for the ambiguities resulting from the use of non-individual HRTFs.

In order to preserve the three-dimensional localization cues in reproduction over loudspeakers, a binaural signal must be decoded by a 2 x 2 inverse transfer-function matrix which cancels the cross-talk from each loudspeaker to the opposite ear [10]. This technique, known as transaural stereophony, imposes a strong constraint on the position and orientation of the listener and loudspeakers during playback. This constraint must be more strictly enforced than in conventional two-channel stereophony if a convincing reproduction of lateral or rear sound sources is desired. Nevertheless, in broadcasting and recording applications over two channels, transaural stereophony offers a viable solution for overcoming the limits of conventional stereophony. Our implementation generally produces reliable localization cues on a carefully installed loudspeaker pair, except in the rear sector. Current research toward improved transaural reproduction includes the implementation of head-tracking [11] and multichannel extensions involving least-squares inversion over multiple listening positions [12].
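The decoding step can be sketched for a single frequency. The sketch assumes that the loudspeaker-to-ear transfer functions are known at that frequency and are written H[ear][loudspeaker], so that ear signals = H x loudspeaker feeds. The cross-talk canceller is then the inverse of H. The transfer values and helper names are hypothetical. A practical decoder computes, or approximates with filters, this inverse across the whole frequency range. The result is only valid for the listener position at which H applies, which is why the technique constrains the listening position.

```python
def invert_2x2(h):
    """Inverse of a 2 x 2 complex matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = h
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("transfer matrix is singular at this frequency")
    return [[d / det, -b / det], [-c / det, a / det]]


def apply(m, v):
    """Matrix-vector product for 2 x 2 matrices."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


# H[ear][loudspeaker] at one frequency (hypothetical symmetric setup):
# unit-gain direct paths, cross-talk paths attenuated and phase-shifted.
direct = 1.0 + 0.0j
cross = 0.4 * (0.0 - 1.0j)
H = [[direct, cross], [cross, direct]]
C = invert_2x2(H)                     # cross-talk canceller

binaural = [1.0 + 0.0j, 0.0 + 0.0j]   # signal meant for the left ear only
speakers = apply(C, binaural)         # loudspeaker feeds
ears = apply(H, speakers)             # signals reaching the ears
print(ears)                           # approximately [1, 0]
```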

Real-time room simulation

The system proposed by Chowning, corresponding to the processing architecture of Figure 1, had some limitations. It was appropriate for conveying the impression that all virtual sources were situated in the same room. However, it did not allow faithful reproduction of the perceived distance or direction of a sound source as experienced in a natural situation, because the temporal and directional distribution of early reflections could not be controlled specifically for each virtual sound source.

Early digital reverberation algorithms based on digital delay lines with feedback, following Schroeder's pioneering studies [13], evolved into more sophisticated designs during the 1980s. These made it possible to shape the early reflection pattern and to simulate the later diffuse reverberation more naturally [14, 18]. An artificial reverberation algorithm based on feedback delay networks, such as the one shown in Figure 3, can mimic the reverberation characteristics of an existing room and deliver several channels of artificial reverberation. It uses only a fraction of the processing capacity of a programmable digital signal processor such as the Motorola DSP56002 [19, 9].
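The feedback delay network principle can be sketched as follows. This minimal version is an illustration under stated assumptions, not the algorithm of Figure 3. It uses 4 channels instead of 8 and a 4 x 4 Hadamard matrix scaled by 1/2 as the unitary feedback matrix. It also uses a single gain per delay line instead of an attenuation filter, so the decay time is the same at all frequencies. Each gain is chosen so that the signal loses 60 dB in T60 seconds: a line of m samples at sample rate fs gets g = 10^(-3 m / (fs T60)). The delay lengths and the low sample rate are hypothetical, chosen to keep the example fast.

```python
def fdn_reverb(x, delays, t60_s, fs):
    """Minimal 4-channel feedback delay network (FDN) reverberator.

    Assumptions: 4 delay lines (lengths in samples), a Hadamard matrix scaled
    by 1/2 as unitary feedback matrix, one frequency-independent gain per
    line, input fed equally to all lines, outputs summed to one channel.
    """
    hadamard = [[1, 1, 1, 1],
                [1, -1, 1, -1],
                [1, 1, -1, -1],
                [1, -1, -1, 1]]
    # -60 dB after t60_s seconds: g_i = 10 ** (-3 * m_i / (fs * T60)).
    gains = [10.0 ** (-3.0 * m / (fs * t60_s)) for m in delays]
    lines = [[0.0] * m for m in delays]   # circular buffers
    pos = [0] * 4
    y = []
    for sample in x:
        # Attenuated outputs of the delay lines (written m_i samples ago).
        outs = [gains[i] * lines[i][pos[i]] for i in range(4)]
        y.append(sum(outs))
        for i in range(4):
            # Unitary feedback: row i of the scaled Hadamard matrix.
            feedback = 0.5 * sum(hadamard[i][j] * outs[j] for j in range(4))
            lines[i][pos[i]] = sample + feedback
            pos[i] = (pos[i] + 1) % delays[i]
    return y


# Impulse response with hypothetical, mutually prime delay lengths and a low
# sample rate to keep the pure-Python loop fast.
fs = 1_000.0
impulse = [1.0] + [0.0] * 1_999
ir = fdn_reverb(impulse, delays=[37, 43, 53, 61], t60_s=0.5, fs=fs)
```

Because the feedback matrix preserves energy, the decay rate is set entirely by the per-line gains. This is what makes the decay time tunable independently of the echo density.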

When combining commercial reverberation units with a conventional mixing console, however, the musician or sound engineer still faces a non-ideal user interface. The perceived distance of sound sources cannot be controlled effectively using only the gain controls d and r in each channel of the mixing console, because it also depends on the settings of the reverberator's controls. This heterogeneity of the user interface limits the possibilities of continuous variation of the perceived distance of sound sources. Furthermore, in most current reverberation units, intuitive adjustments of the room effect are typically limited to modifying the decay time or the size of a factory-preset virtual room. The signal processing structure is also usually not designed for reproduction formats other than conventional stereophony. These limitations make traditional mixing architectures inadequate for interactive and immersive applications, as well as for broadcasting and production of recordings in new formats such as 3/2-stereo.

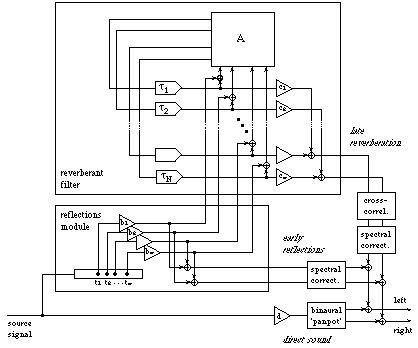

The minimum requirement for overcoming these limitations appears to be providing, in each channel of the mixing architecture, not only a panpot but also a module for controlling the distribution of the early reflections specifically for each sound source, as shown in Figure 4 [19]. Moore [20] described a signal processing structure of this type, which controls the times and amplitudes of the first reflections, for each source signal and each output channel, according to:

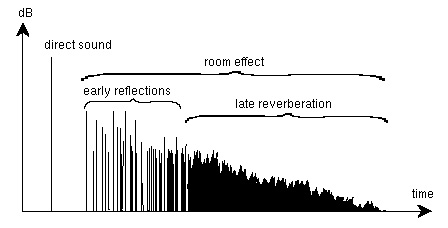

Figure 3: Typical echogram for a source and a receiver in a room, and cost-efficient real-time binaural room simulation algorithm using feedback delay networks. The delay lengths $t_i$ and gains $b_i$ allow control of the time, amplitude and lateralization of each early reflection. Each delay line $\tau_i$ includes an attenuation filter allowing accurate control of the reverberation decay time vs frequency. The feedback matrix $A$ is a unitary (energy-preserving) matrix. A typical implementation of this algorithm involves 8 feedback channels.

The identification of indirect sound paths from each source to each virtual microphone is based on a geometrical model of sound propagation, assuming specular reflections of sound waves on the walls of the virtual room (image source model). The arrival time and frequency-dependent attenuation of each early reflection can be computed by simulating all physical phenomena along the corresponding path as a cascade of elementary filters: radiation by the source, propagation in the air, absorption by walls and capture by the microphone.
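The image source model can be sketched for the simplest case: a rectangular room and first-order reflections only. The sketch makes several simplifying assumptions. Every wall has the same frequency-independent reflection factor, so a single number replaces the cascade of filters described above. Only spherical spreading (1/r) is modelled, and the speed of sound is taken as 343 m/s. The function name and room dimensions are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def first_order_paths(room, source, listener, wall_reflection=0.8):
    """Direct sound and first-order reflections in a rectangular room.

    room = (Lx, Ly, Lz) in metres with one corner at the origin; source and
    listener are (x, y, z) points inside the room. All six walls share one
    frequency-independent reflection factor (an assumption). Returns a list
    of (delay_s, amplitude) pairs sorted by arrival time, with amplitude
    following spherical spreading (1/r).
    """
    sources = [(tuple(source), 1.0)]                  # direct path
    for axis in range(3):
        for wall in (0.0, room[axis]):
            image = list(source)
            image[axis] = 2.0 * wall - source[axis]   # mirror in the wall plane
            sources.append((tuple(image), wall_reflection))
    paths = []
    for position, reflection in sources:
        r = math.dist(position, listener)
        paths.append((r / SPEED_OF_SOUND, reflection / r))
    return sorted(paths)


for delay, amp in first_order_paths((10.0, 8.0, 3.0), (2.0, 3.0, 1.5), (6.0, 3.0, 1.5)):
    print(f"{delay * 1000:6.2f} ms   amplitude {amp:.3f}")
```

Higher-order reflections follow by mirroring the image sources again. Their number grows quickly with the order, which is one reason this computation is costly when sources or the listener move.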

Figure 4: Improved mixing architecture for reproducing several virtual sound sources located in the same virtual room, while controlling the early reflection pattern associated with each individual source.

For application to headphone reproduction, Moore proposes reducing the size of the 'listening room' to the size of a head and placing the two microphones on its sides. His directional encoding model then becomes equivalent to a rough implementation of HRTF filtering. From a signal processing point of view, this approach to room simulation is equivalent to using a digital mixing console as in Figure 1. The same source signal is used in several channels of the console, and each additional channel reproduces an early reflection. The delay and gain can be set in each channel to control the arrival time and amplitude of the reflection, as captured by an omnidirectional microphone placed at the center of the 'head' or 'listening room'. From this signal, the panning module then derives the P microphone signals to be delivered to the loudspeakers or headphones. It does so according to the direction of incidence of the reflection, following the principle of Figure 2 (which can be extended to more than 2 channels).
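The console-channel view described above can be sketched as a tapped delay line feeding a panning stage. In the sketch, each tap is a hypothetical (delay, gain, pan_gains) triple. The delay and gain set the arrival time and amplitude of one reflection. The pan gains (one per output channel, from any panning law) stand for the direction-dependent encoding, which in Moore's headphone model would be a rough HRTF filter rather than a pair of gains. Names and values are illustrative only.

```python
def early_reflection_module(x, taps, num_outputs):
    """Tapped delay line feeding a panning stage.

    Each tap (delay_samples, gain, pan_gains) plays the role of one extra
    console channel fed with the same source signal: the delay and gain set
    the arrival time and amplitude of one reflection, and pan_gains (one
    value per output channel) encodes its direction of incidence.
    """
    max_delay = max(delay for delay, _, _ in taps)
    outputs = [[0.0] * (len(x) + max_delay) for _ in range(num_outputs)]
    for delay, gain, pan_gains in taps:
        for channel in range(num_outputs):
            g = gain * pan_gains[channel]
            for n, sample in enumerate(x):
                outputs[channel][n + delay] += g * sample
    return outputs


# Hypothetical stereo example: direct sound in the centre, one reflection
# from the left after 12 samples and one from the right after 20 samples.
taps = [(0, 1.0, [0.5, 0.5]), (12, 0.5, [1.0, 0.0]), (20, 0.25, [0.0, 1.0])]
left, right = early_reflection_module([1.0], taps, num_outputs=2)
```

The cost grows with the product of the number of reflections, sources and output channels. This is the cost that the perceptually motivated simplifications discussed below aim to reduce.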

Very recently, systems performing binaural processing of both the direct sound and the early reflections in real time have been proposed, in which room reflections are computed according to the same physical and geometrical model as above [7, 8]. These systems involve a heavy real-time signal processing effort: a binaural panning module must be implemented for each early reflection and for each virtual sound source, which is impractical for most real-world applications. Fortunately, this signal processing cost can be further reduced by introducing perceptually relevant simplifications in the spectral and binaural processing of early reflections [9]. In addition to this signal processing task, a considerable processing effort is necessary for updating all parameters whenever a sound source or the listener moves [20, 8]. As Moore noted, the dynamic variation of the delay times of the direct sound and reflections will produce the expected Doppler effects naturally.
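Moore's observation about Doppler effects can be illustrated with a variable delay line. The sketch makes three assumptions: the speed of sound is 343 m/s, fractional delays use linear interpolation, and the delay equals the current source-listener distance divided by the speed of sound. Names and motion are hypothetical. For a source approaching at speed v, the delay shrinks by v/c seconds per second. The read position therefore advances by 1 + v/c samples per output sample, and the received pitch rises by that factor, a first-order approximation of the physical Doppler shift.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def variable_delay(x, delays_samples):
    """Read x through a time-varying delay line with linear interpolation.

    delays_samples[n] is the (possibly fractional) delay applied at output
    sample n; samples outside x are treated as silence.
    """
    def at(i):
        return x[i] if 0 <= i < len(x) else 0.0

    y = []
    for n, d in enumerate(delays_samples):
        t = n - d                 # fractional read position in x
        i = math.floor(t)
        frac = t - i
        y.append((1.0 - frac) * at(i) + frac * at(i + 1))
    return y


# Hypothetical scene: a 1 kHz source approaching the listener at 20 m/s.
fs = 48_000.0
velocity = 20.0                       # m/s, positive when approaching
start_distance = 5.0                  # m
n_samples = 4_800                     # 0.1 s
distances = [start_distance - velocity * n / fs for n in range(n_samples)]
delays = [d / SPEED_OF_SOUND * fs for d in distances]   # in samples
source = [math.sin(2.0 * math.pi * 1000.0 * n / fs) for n in range(n_samples)]
received = variable_delay(source, delays)

# Per output sample, the delay shrinks by v / c samples, so the read
# position advances by 1 + v / c samples: the pitch rises by that factor.
print(f"pitch ratio ~ {1.0 + velocity / SPEED_OF_SOUND:.4f}")
```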

Another approach to real-time artificial reverberation was proposed recently, based on hybrid convolution in the time and frequency domains [21, 22]. Unlike earlier convolution algorithms, these hybrid algorithms make it possible to implement a very long convolution filter with no input-output delay at an affordable computational cost. Unlike reverberation algorithms based on feedback delay networks, convolution methods allow exact reproduction of reverberation derived from an impulse response, whether measured in an existing room or computed from a model. However, it is impractical to update the lengthy impulse response of a convolution processor dynamically, whether to tune the artificial reverberation effect or to simulate moving sound sources and Doppler effects. In most interactive applications, this global convolution approach must be restricted to the rendering of the late reverberation, which can be synthesized more efficiently by a feedback delay network such as the one shown in Figure 3.
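The principle that allows long convolution with no input-output delay can be sketched with a two-part split of the impulse response. The head is convolved directly, so its output is available immediately. The tail's contribution only starts head_length samples later, which leaves time to compute it with a block method. By linearity, the sum is the full convolution. The hybrid algorithms cited above use several partitions of increasing size with FFT-based block convolution. This sketch uses two partitions and direct convolution for both, only to show why the split preserves the result. The function names are hypothetical.

```python
def convolve(x, h):
    """Direct-form convolution (full output length len(x) + len(h) - 1)."""
    out = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            out[n + k] += xn * hk
    return out


def two_part_convolve(x, h, head_length):
    """Convolve x with h split into a head h[:head_length] and a tail.

    The head contribution can be produced with no input-output delay; the
    tail contribution starts head_length samples later, which leaves that
    much time to compute it with a block (e.g. FFT-based) method. By
    linearity, the sum equals the full convolution.
    """
    head, tail = h[:head_length], h[head_length:]
    y = [0.0] * (len(x) + len(h) - 1)
    for n, v in enumerate(convolve(x, head)):
        y[n] += v
    if tail:
        for n, v in enumerate(convolve(x, tail)):
            y[n + head_length] += v
    return y


x = [1.0, -2.0, 0.5, 3.0]
h = [0.5, 0.25, -1.0, 0.125, 2.0, -0.5]
full = convolve(x, h)
split = two_part_convolve(x, h, head_length=2)
print(max(abs(a - b) for a, b in zip(full, split)))  # 0.0
```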

Tunability, in real time, through perceptually relevant control parameters.

These control parameters should include the azimuth and elevation of each virtual sound source, as well as descriptors of the room effect, separately for each source. The perceptual effect of each control parameter should be predictable and independent of the setting of other parameters. A measurement and analysis procedure should make it possible to derive the settings of all control parameters automatically from an existing situation.

Configurability according to the reproduction setup and context.

Since no single reproduction format can satisfy all applications, it should be possible, once the desired localization and reverberation effects have been specified, to configure the signal processor for reproduction in various formats over headphones or loudspeakers. This should include corrections (such as spectral equalization) in order to preserve the perceived effect, as much as possible, across different setups and different listening rooms.

Computational efficiency.

The processor should make optimal use of the available computational resources. In a particular application, the user or the designer may accept a loss of flexibility or of independence between some control parameters. It should then be possible to reduce the overall complexity and cost of the system further, by introducing relevant simplifications in the signal processing and control architecture. One illustration is the system of Figure 4. There, the late reverberation algorithm is shared between several sources, assuming that these are located in the same virtual room, while an early reflection module is associated with each individual sound source.

The Spatialisateur

Espaces Nouveaux and Ircam have developed a virtual acoustics processor, the Spatialisateur, which is built by combining software modules for real-time signal processing and control. The synthesis of localization and room effect cues can be integrated in a single compact processing module for each source signal. This processor can be configured for various electroacoustic reproduction systems: three-dimensional encoding on two channels for individual listening over headphones or loudspeakers, as well as various multichannel formats intended for small or medium-sized listening rooms and auditoria. Several processors can be run in parallel in order to process several source signals simultaneously.

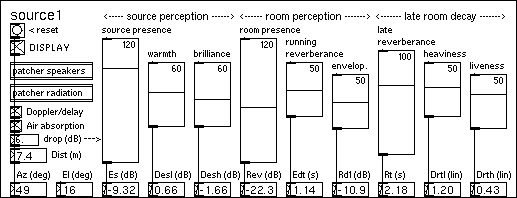

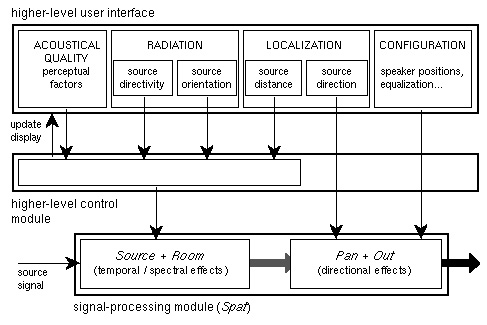

The design approach adopted in the Spatialisateur project lets the user specify the desired effect from the point of view of the listener, rather than from the point of view of the technological apparatus or physical process which generates that effect. A higher-level user interface controls the different signal processing sub-modules simultaneously. It makes it possible to specify the reproduced effect, for one source signal, through a set of control parameters whose definitions do not depend on the chosen reproduction system or setup (Figure 5). These parameters include the azimuth and elevation of the virtual sound source, as well as descriptors of the room acoustical quality (or room effect) associated with this sound source.

The room acoustical quality is not controlled through a model of the virtual room's geometry and wall materials, but through a formalism directly related to the perception of the virtual sound source by the listener, described by a small number of mutually independent 'perceptual factors':

User interface: physical vs perceptual approach

The synthesis of a virtual sound scene relies on a description of the positions and orientations of the sound sources and of the acoustical characteristics of the space. This description is then translated into parameters of a signal processing algorithm such as the one shown in Figure 3. From a general point of view, the space can be described either physically and geometrically, or by attributes describing the perceived acoustical quality associated with each sound source [25]. The first approach typically suggests a three-dimensional graphic user interface representing the geometry of the room and the positions of the sources and listener. It relies on a computer algorithm simulating the propagation of sound in rooms (such as the image source model mentioned earlier). The second approach relies on knowledge of the perception of room acoustical quality. It suggests a graphic interface such as the one shown in Figure 5, or various types of multidimensional interfaces (as described further in section 4).

A physically-based user interface following the first approach will not allow direct and effective control of the sensation perceived by the listener [25]. Localization can be directly controlled by a geometric interface. However, many aspects of room acoustical quality (such as envelopment or reverberance) will be affected by a change in the position of the source or the listener. These effects are not easily predictable and depend on room geometry and wall absorption characteristics. Conversely, adjustments of the room acoustical quality can only be achieved by modifying these geometry and absorption parameters, and the effects of such adjustments are often unpredictable or nonexistent. Additionally, a physically-based user interface will only allow the reproduction of physically realizable situations. Source positions will be constrained by the geometry of the space and, even if the modelled room is imaginary, the laws of physics will limit the range of realizable acoustical qualities. For instance, in a room of a given shape, modifying wall absorption coefficients in order to obtain a longer reverberation decay will also increase the reverberation level.
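The coupling mentioned in the last sentence can be quantified with Sabine's classical reverberation formula. This worked example assumes a diffuse sound field, Sabine's formula T60 = 0.161 V / A (V in m^3, A the equivalent absorption area in m^2), and a steady-state reverberant energy inversely proportional to A, which is a common approximation. The room volume and absorption values are hypothetical.

```python
import math


def sabine_t60(volume_m3, absorption_m2):
    """Sabine reverberation time T60 = 0.161 V / A (V in m^3, A in m^2)."""
    return 0.161 * volume_m3 / absorption_m2


def reverberant_level_change_db(absorption_before_m2, absorption_after_m2):
    """Change in steady-state reverberant level for a constant source power,
    assuming the diffuse-field energy is inversely proportional to A."""
    return 10.0 * math.log10(absorption_before_m2 / absorption_after_m2)


volume = 2_000.0                 # m^3, hypothetical room
before, after = 160.0, 80.0      # equivalent absorption area, m^2
print(f"T60: {sabine_t60(volume, before):.2f} s -> {sabine_t60(volume, after):.2f} s")
print(f"reverberant level: {reverberant_level_change_db(before, after):+.1f} dB")
```

Halving the absorption doubles the decay time but also raises the reverberant level by about 3 dB. A physical model cannot separate the two effects, whereas a perceptual control can.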

Figure 5: Higher-level user interface and general structure of the Spatialisateur (shown for one source signal). The user interface contains perceptual attributes for tuning the desired effect, as well as configuration parameters which are set at the beginning of a performance or work session, according to the reproduction format and setup.

In contrast to a physical approach, a perceptual approach leads to a more intuitive and effective user interface, because the control parameters are directly related to audible sensations. It also leads to a more efficient implementation of the control process, which dynamically updates low-level signal processing parameters according to higher-level user interface settings. In a physical approach, the arrival time, spectrum and direction of each early reflection must be updated whenever the source or the listener moves. The room simulation process that performs this update is computationally heavy, unless the room model is restricted to very simple geometries such as rectangular rooms [20, 8].

Finally, a perceptual approach allows a more efficient implementation of the digital signal processing algorithm itself, i. e. the 'number-crunching' which must be performed cyclically for each input sound sample merely to produce the output sound signals. This 'number-crunching' must be performed whether or not sources move or room parameters are modified. This differs from image synthesis, where computations are necessary only if light sources or reflective objects change in position, shape or color. For spatial sound processing, focusing on the control of perceptual attributes rather than mimicking physical phenomena allows drastic improvements in the computational efficiency of the signal processing algorithms.

Signal processing modules

The Spatialisateur was developed in the Max/FTS object-oriented signal processing software environment [26]. It is implemented as a Max signal-processing object (named Spat) running in real time on the Ircam Music Workstation. Spat can also be considered as a library of elementary modules for real-time spatial processing of sounds (panpots, artificial reverberators, parametric equalizers...). This modularity makes it possible to configure a spatial processor for different applications or with different computational costs. The choice depends on the reproduction format or setup, the desired flexibility in controlling the room effect, and the available digital signal processing resources. As shown in Figure 5, the Spat processor is formed by a cascade of four configurable sub-modules, namely Source, Room, Pan and Out. A Spat module is configured in a straightforward way by substituting one version of a sub-module for another.
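The cascade-and-substitute organization can be sketched generically. This is not Spat's code, which runs as a Max/FTS object. In the sketch, every stage is a function from a list of channels to a list of channels. The four stand-ins for Source, Room, Pan and Out are hypothetical placeholders (a gain, a dry copy to two "room" channels, a two-channel fold-down and a per-output trim). They show only how a processor is assembled and how one version of a sub-module can replace another.

```python
from typing import Callable, List

Signal = List[float]
Channels = List[Signal]
Stage = Callable[[Channels], Channels]


def cascade(*stages: Stage) -> Stage:
    """Chain stages in order, as in the Source -> Room -> Pan -> Out cascade."""
    def process(channels: Channels) -> Channels:
        for stage in stages:
            channels = stage(channels)
        return channels
    return process


# Hypothetical stand-ins for the four sub-modules (not Spat's actual code).
def source_gain(gain: float) -> Stage:
    return lambda ch: [[gain * s for s in ch[0]]]


def room_dry(num_room_channels: int) -> Stage:
    # Placeholder "room": copies the dry signal to each room channel.
    return lambda ch: [list(ch[0]) for _ in range(num_room_channels)]


def pan_stereo(left_gain: float, right_gain: float) -> Stage:
    # Placeholder "pan": folds all room channels into two outputs.
    def pan(ch: Channels) -> Channels:
        summed = [sum(samples) for samples in zip(*ch)]
        return [[left_gain * s for s in summed], [right_gain * s for s in summed]]
    return pan


def out_trim(gains: List[float]) -> Stage:
    # Placeholder "out": per-output gain correction only.
    return lambda ch: [[g * s for s in c] for g, c in zip(gains, ch)]


stereo_spat = cascade(source_gain(0.5), room_dry(2), pan_stereo(1.0, 0.5), out_trim([1.0, 2.0]))
print(stereo_spat([[1.0, 0.0, -1.0]]))  # [[1.0, 0.0, -1.0], [1.0, 0.0, -1.0]]
```

Switching to another reproduction format would amount to passing a different Pan and Out stage to cascade, leaving the Source and Room stages unchanged.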

The Room module is a computationally efficient multi-channel reverberator based on multi-channel feedback delay networks. It is designed to ensure the naturalness and accuracy required for professional audio or virtual reality applications [19, 9]. The input signal (assumed devoid of reverberation) can be pre-processed by the Source module. This module can include a low-pass filter and a variable delay line to reproduce air absorption and the Doppler effect, as well as spectral equalizers allowing additional corrections according to the nature of the input signal. The Room module can be broken down into elementary reverberation modules (e. g. an early reflection module or a late reverberation module). This makes it possible to build various processing structures, such as those of Figure 1 or Figure 4. The reverberation modules exist in several versions with different numbers of feedback channels, so that computational efficiency can be traded off against the time or frequency density of the artificial reverberation [18, 19].

The multichannel output of the Room module is directly compatible with reproduction of frontal sounds in the 3/2-stereo format. The directional distribution module Pan can then convert this multi-channel output to various reproduction formats or setups, while controlling the perceived direction of the sound event (currently limited to the horizontal plane and the upper hemisphere). It can be configured for two-channel formats. These include three-dimensional stereophony (binaural or transaural) over headphones or over a pair of loudspeakers, and the simulation of coincident or non-coincident stereo microphone recordings [9]. Multi-channel formats, appropriate for studios or concert auditoria, allow reproduction over 4 to 8 loudspeakers in various 2-D or 3-D arrangements (the structure of the Pan module can easily be extended to a higher number of channels if necessary).

The reproduced effect is specified perceptually in the higher-level control interface, irrespective of the reproduction context, and is preserved as much as possible from one reproduction mode or listening room to another. Generally speaking, the Out module can be used as a 'decoder' for adapting the multi-channel output of the Pan module to the geometry or acoustical response of the loudspeaker system or headphones, including spectral and time delay correction of each output channel. In a typical multichannel reproduction setup, this can be used to equalize the direct paths from all loudspeakers to a reference listening position. However, in addition to these direct paths, the listening room will provide its own reflections and reverberation. These will also affect the listener's perception of the sounds delivered by the loudspeakers. To correct the temporal effects of the listening room reverberation, the high-level control processing module includes a new algorithm that corrects the reverberation synthesized by the Room module. The goal is that the perceived effect at a reference listening position be as close as possible to the specification defined by the higher-level user interface. This compensation process makes it possible, for instance and under certain restrictive conditions, to simulate the acoustics of a given room in another one, with recorded or live sound sources.
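The delay and level part of the Out correction can be sketched as follows. The sketch assumes free-field 1/r spreading and a speed of sound of 343 m/s, and omits spectral equalization. Nearer loudspeakers are delayed by the extra travel time of the farthest one and attenuated in proportion to their distance. The direct sound of every loudspeaker then reaches the reference position at the same time and level. The function name and layout are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s (assumed)


def align_loudspeakers(distances_m, fs):
    """Per-loudspeaker (delay_samples, gain) corrections so that the direct
    sound of every loudspeaker reaches the reference listening position at
    the same time and level.

    Assumes free-field 1/r spreading; the spectral equalization that the Out
    module also performs is omitted.
    """
    farthest = max(distances_m)
    corrections = []
    for r in distances_m:
        delay = (farthest - r) / SPEED_OF_SOUND * fs   # nearer loudspeakers wait
        gain = r / farthest                             # and are attenuated
        corrections.append((delay, gain))
    return corrections


# Hypothetical layout: distances (m) from five loudspeakers to the sweet spot.
for delay, gain in align_loudspeakers([2.0, 2.2, 2.5, 3.43, 3.0], 48_000.0):
    print(f"delay {delay:7.1f} samples   gain {gain:.3f}")
```

This corrects only the direct paths. The listening room's own reflections and reverberation are addressed by the reverberation compensation described in the preceding paragraph.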

Even in the most computationally demanding reproduction modes, such as binaural and transaural stereophony, a 'high-fidelity' implementation of Spat requires less than 400 multiply-accumulates per sample period at a rate of 48 kHz. This corresponds to less than 20 million operations per second (400 x 48,000 = 19.2 million), which can be handled by a single programmable digital signal processor such as the Motorola DSP56002 or Texas Instruments TMS320C40. It is thus economically feasible to insert a full spatial processor (including both directional panning and reverberation) in each channel of a digital mixing console, by devoting one DSP to each source channel. The mix can be produced in traditional as well as emerging formats, including conventional stereo, 3/2-stereo, or three-dimensional two-channel stereo (transaural stereo). This increased processing capacity might call for a new generation of user interfaces for studio recording and computer music applications. Providing a reduced set of independent perceptual attributes for each virtual source, as discussed in this paper, is a promising approach from the point of view of ergonomics.

Spatial sound processors for virtual reality and multimedia applications (video games, simulation, teleconferencing, etc.) also rely on a real-time mixing architecture and can benefit substantially from the reproduction of a natural-sounding room effect, which allows effective control of the subjective distance of sound events. Many of these applications involve simulating several sources located in the same virtual room, which makes it possible to reduce the overall signal and control processing cost by using a single late reverberation module (Figure 4). This cost can be reduced further in applications that can accommodate a less refined reproduction and control of the room effect (e.g. video games or 'augmented reality' applications, where an artificial sensation of distance must be controlled but the room signature matters less). Binaural reproduction over headphones is particularly well suited to such applications and can be combined with image synthesis in order to immerse a spectator in a virtual environment. The Spatialisateur is designed to allow remote control through pointing or tracking devices and to ensure a high degree of interactivity, with localization update rates of up to 33 Hz (fast enough for video synchronization or operation with a head-tracker). An alternative reproduction environment for simulators is a booth equipped with a multichannel loudspeaker system (such as the 'Audiosphere' designed by Espaces Nouveaux). Future research directions include modeling individual differences in HRTFs, individual equalization of binaural recordings, and improved methods for multichannel reproduction over a wide listening area.
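The shared-reverberation idea can be illustrated with a toy mono mixer: each source keeps its own direct-path gain, while all reverberation sends are summed into one bus that feeds a single late-reverberation process. This is only a sketch under strong assumptions: the function names are hypothetical, and a single feedback comb filter stands in for a real late reverberator, which would be a much richer multichannel structure.

```python
def comb_reverb(x, delay, feedback):
    """Toy late reverb: one feedback comb filter, y[n] = x[n] + g * y[n - D].

    A stand-in only; a real late-reverberation module is far richer.
    """
    y = [0.0] * len(x)
    for n in range(len(x)):
        y[n] = x[n] + (feedback * y[n - delay] if n >= delay else 0.0)
    return y


def render_scene(sources, direct_gains, reverb_sends, delay=1000, feedback=0.7):
    """Mix several sources located in the same virtual room.

    Each source has its own direct-path gain (where per-source panning and
    early reflections would go), but all reverb sends are summed into ONE
    bus processed by a single late-reverb instance, so the late-reverb cost
    does not grow with the number of sources.
    """
    length = max(len(s) for s in sources)
    direct = [0.0] * length
    bus = [0.0] * length
    for sig, g_dir, g_rev in zip(sources, direct_gains, reverb_sends):
        for n, v in enumerate(sig):
            direct[n] += g_dir * v
            bus[n] += g_rev * v
    late = comb_reverb(bus, delay, feedback)  # computed once for all sources
    return [d + r for d, r in zip(direct, late)]


# Two impulses, one sample apart, sharing one reverb bus.
out = render_scene([[1.0] + [0.0] * 9, [0.0, 1.0] + [0.0] * 8],
                   direct_gains=[1.0, 0.5], reverb_sends=[0.2, 0.2],
                   delay=4, feedback=0.5)
```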

Live computer music performances and architectural acoustics

The perceptual approach adopted in the Spatialisateur project allows the composer to take spatial effects into account from the early stages of the composition process, without reference to a particular electro-acoustic apparatus or performance space. Executing the spatial processing in real time during the concert makes it possible to apply corrections specific to the reproduction setup and context. Localization effects, now a traditional resource of electro-acoustic music, can thus be preserved more reliably from one performance to another. Spatial reverberation processing produces more convincing illusions of distant virtual sound sources and helps conceal the acoustic signature of the loudspeakers over a wider listening area. It thus achieves better continuity between live sources and synthetic or pre-recorded signals, which is a significant issue, e.g. in computer music [27, 25].

Consequently, a computer music work need not be written for a specific number of loudspeakers in a specific geometrical arrangement. As an illustration, consider an electro-acoustic piece composed in a personal studio equipped with four loudspeakers. Rather than producing a four-channel mix to be used in all subsequent concert performances, the composer can store a score describing all spatial effects applied to each sound source, using musical sequencer software. By reconfiguring the signal processing structure (i.e. substituting appropriate versions of the Pan and Out modules), a suitable mix can then be produced for a concert performance over 8 channels, or for a transaural stereo recording that preserves three-dimensional effects in domestic playback over two loudspeakers.
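The separation between a layout-independent score and a layout-specific rendering stage can be sketched as follows. As an assumption, pairwise constant-power amplitude panning over a ring of equally spaced loudspeakers stands in for the Pan module, whose actual panning law is not described here; the score format and function names are hypothetical.

```python
import math


def pairwise_pan(azimuth_deg, num_speakers):
    """Constant-power pairwise panning on a ring of equally spaced speakers.

    Speaker k sits at k * 360/N degrees (speaker 0 straight ahead). Returns
    one gain per speaker: at most the two speakers bracketing the azimuth
    are active, and their squared gains sum to 1.
    """
    spacing = 360.0 / num_speakers
    az = azimuth_deg % 360.0
    k = int(az // spacing)                  # speaker at or just below the azimuth
    frac = (az - k * spacing) / spacing     # 0 at speaker k, 1 at speaker k+1
    gains = [0.0] * num_speakers
    gains[k % num_speakers] = math.cos(frac * math.pi / 2)
    gains[(k + 1) % num_speakers] = math.sin(frac * math.pi / 2)
    return gains


# Hypothetical layout-independent score: (time in s, source, azimuth in degrees).
score = [(0.0, "flute", 0.0), (2.0, "flute", 45.0), (4.0, "voice", 135.0)]


def render_score(score, num_speakers):
    """Turn the stored score into per-speaker gains for one particular layout."""
    return [(t, src, pairwise_pan(az, num_speakers)) for t, src, az in score]


four = render_score(score, 4)    # studio: four loudspeakers
eight = render_score(score, 8)   # concert: eight loudspeakers
```

Only `num_speakers` changes between the two renderings. At 45°, the four-speaker version splits the flute equally between speakers 0 and 1, while the eight-speaker version sends it entirely to speaker 1, which sits exactly at 45°.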

The Spatialisateur can be used to design an electro-acoustic system that modifies the acoustical quality of an existing room, for sound reinforcement or reverberation enhancement, with live sources or pre-recorded signals. For relatively large audience areas (e.g. large concert halls or multipurpose halls), the signal processing structure can be reconfigured for the particular situation (by connecting sub-modules of Spat) according to a division of the audience and/or stage area into adjacent zones, in order to ensure effective control of the perceptual attributes related to the direct sound and early reflections for all listeners.

Musical and multidimensional interfaces

Spatial attributes of sounds can thus be manipulated as natural extensions of the musical language. The availability of perceptually relevant attributes for describing the room effect can encourage the composer to use room acoustical quality, in addition to the localization of sound events, as a musical parameter [28, 25]. In one approach (initiated by Georges Bloch in 1993 using an early Spatialisateur prototype), the spatial processor's score can be recorded in successive "passes". During each pass, manipulations of the spatial attributes of one or several sound sources can be added to the score and monitored in real time together with previously stored effects.

This is similar to operating an automation system in a mixing console, except that it allows manipulation of room acoustical parameters that are not available in traditional mixing-console automation systems. In this approach, the control parameters should be mutually independent, i.e. manipulating one spatial attribute must not destroy or modify the perceived effect of previously stored manipulations of other spatial attributes (except possibly in extreme or obviously predictable cases: for instance, suppressing the room presence will make adjustments of the late reverberance imperceptible). For operational efficiency, the perceived effect of each parameter should also be predictable, particularly when the score is to be edited or written directly without real-time monitoring. As discussed in this paper, such modes of operation would be much more difficult with a physically based approach, or with current mixing architectures and reverberation units.
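The multi-pass principle, and why it relies on independent parameters, can be sketched as an automation store in which each (source, parameter) pair has its own track, so that a new pass overwrites only the tracks it touches. The class, parameter names and values below are hypothetical illustrations, not the Spatialisateur's score format.

```python
class SpatialScore:
    """Hypothetical multi-pass automation score.

    Each (source, parameter) pair has its own track of (time, value)
    breakpoints. A new pass replaces only the tracks it touches, so moves
    recorded earlier on other parameters are left intact; this only makes
    sense perceptually if the parameters are independent.
    """

    def __init__(self):
        self.tracks = {}  # (source, param) -> sorted [(time, value), ...]

    def record_pass(self, moves):
        """moves: {(source, param): [(time, value), ...]} captured in one pass."""
        for key, points in moves.items():
            self.tracks[key] = sorted(points)

    def value_at(self, source, param, t, default=None):
        """Value of the last breakpoint at or before time t (step interpolation)."""
        value = default
        for time, v in self.tracks.get((source, param), []):
            if time > t:
                break
            value = v
        return value


score = SpatialScore()
# Pass 1: move the voice from left to right.
score.record_pass({("voice", "azimuth"): [(0.0, -30.0), (4.0, 30.0)]})
# Pass 2: add a late-reverberance change; the azimuth track is untouched.
score.record_pass({("voice", "late_reverberance"): [(0.0, 1.0), (2.0, 2.5)]})
print(score.value_at("voice", "azimuth", 5.0))            # 30.0
print(score.value_at("voice", "late_reverberance", 3.0))  # 2.5
```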

Besides a sequencing or automation process, another approach to creating simultaneous variations of several spatial attributes for one or several virtual sound sources consists of defining a mapping from a graphic or gestural interface to the multidimensional space defined by a set of perceptually relevant control parameters: azimuth and/or elevation, together with a set of perceptual factors describing the room acoustical quality. A simple example is included in the higher-level interface of the Spatialisateur to allow straightforward connection to a two-dimensional localization control interface delivering polar coordinates (azimuth and distance): the 'distance' control is mapped logarithmically to the 'source presence' perceptual factor (the 'drop' parameter defines the drop in presence, in dB, for each doubling of the distance; setting 'drop' to 6 dB emulates the natural attenuation of sound with distance). This provides a simple and effective way of connecting the Spatialisateur to graphic or gestural interfaces, in order to create three-dimensional sound trajectories on various reproduction formats or setups, or to draw a map of a virtual sound scene with several sources at different positions in the horizontal plane around the listener.
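The logarithmic distance mapping amounts to subtracting 'drop' dB per doubling of distance. A minimal sketch, assuming a hypothetical reference distance at which the presence offset is 0 dB (the text does not specify one); the function and parameter names are also hypothetical.

```python
import math


def distance_to_presence(distance, drop_db=6.0, ref_distance=1.0, ref_presence_db=0.0):
    """Source-presence offset in dB for a given distance.

    Each doubling of distance relative to ref_distance lowers presence by
    drop_db; drop_db = 6 approximates the free-field inverse-distance law
    (20 * log10(2) is about 6.02 dB per doubling).
    """
    if distance <= 0 or ref_distance <= 0:
        raise ValueError("distances must be positive")
    return ref_presence_db - drop_db * math.log2(distance / ref_distance)


print(distance_to_presence(4.0))               # -12.0 (two doublings)
print(distance_to_presence(4.0, drop_db=3.0))  # -6.0 (gentler, 'drier' law)
```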

This mapping principle can of course be implemented in many ways, with various types of multidimensional interfaces, allowing a wide variety of creative effects. Because of the nature of the multidimensional scaling analysis that led to the definition of the perceptual factors [23, 24], these factors can be considered as coordinates in an orthonormal basis, which makes it possible to define a norm (in the mathematical sense) for measuring the perceptual dissimilarity between acoustical qualities. It follows that linear weighting along one perceptual factor or a set of perceptual factors provides a general and perceptually relevant method for interpolating between different acoustical qualities [25]. For instance, it allows a gradual and natural-sounding transition from the sensation of listening to a singer from 20 meters away on the balcony of an opera house to the sensation of being 3 meters behind the singer in a cathedral (possibly based on acoustical impulse responses measured in two existing spaces), without having to implement a questionable geometrical and physical 'morphing' process between the two situations.
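Treating the perceptual factors as orthonormal coordinates reduces both the dissimilarity norm and the interpolation to a few lines of arithmetic. In this sketch, the factor names are a subset of those mentioned in this paper, the numeric values for the two settings are invented purely for illustration, and the Euclidean norm is assumed as the norm in question.

```python
import math

# Illustrative subset of perceptual factors (names as used in the text).
FACTORS = ("source_presence", "room_presence", "late_reverberance")


def dissimilarity(a, b):
    """Euclidean distance between two settings, factors taken as orthonormal axes."""
    return math.sqrt(sum((a[f] - b[f]) ** 2 for f in FACTORS))


def interpolate(a, b, weights):
    """Per-factor linear weighting between settings a and b.

    weights[f] = 0 keeps a's value and 1 takes b's; factors absent from
    `weights` keep a's value.
    """
    return {f: a[f] + weights.get(f, 0.0) * (b[f] - a[f]) for f in FACTORS}


# Invented values standing in for two measured halls (illustration only).
opera_balcony = {"source_presence": 20.0, "room_presence": 60.0, "late_reverberance": 40.0}
cathedral_behind = {"source_presence": 50.0, "room_presence": 90.0, "late_reverberance": 100.0}

halfway = interpolate(opera_balcony, cathedral_behind, {f: 0.5 for f in FACTORS})
only_reverb = interpolate(opera_balcony, cathedral_behind, {"late_reverberance": 1.0})
print(halfway)  # {'source_presence': 35.0, 'room_presence': 75.0, 'late_reverberance': 70.0}
```

Sweeping the weights from 0 to 1 traces a straight path between the two qualities, so the halfway setting sits at exactly half the perceptual distance; a single-factor weight such as `{"late_reverberance": 1.0}` moves along that one axis only.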

This opens up research on new multidimensional interfaces for music and for the audio components of virtual reality in various fields. A further direction of research is the extension of the perceptual control formalism to spaces such as small rooms, chambers, corridors or outdoor spaces. In the current implementation of the Spatialisateur, such spaces can be handled by manipulating, in addition to the higher-level perceptual factors, some lower-level processing parameters available in the user interface of the Room module.

[2] D. Begault: 3-D Sound for virtual reality and multimedia; Academic Press, 1994.

[3] M. Cohen, E. Wenzel: The design of multidimensional sound interfaces; Technical Report 95-1-004, Human Interface Laboratory, Univ. of Aizu, 1995.

[4] M. Gerzon: Ambisonics in multichannel broadcasting and video; J. Audio Engineering Society, vol. 33, no. 11, 1985.

[5] J. Chowning: The simulation of moving sound sources; J. Audio Engineering Society, vol. 19, no. 1, 1971.

[6] G. Thiele: The new sound format '3/2-stereo'; Proc. 94th Conv. Audio Engineering Society, preprint 3550a, 1993.

[7] A. Persterer: A very high performance digital audio processing system; Proc. 13th International Conf. on Acoustics (Belgrade), 1989.

[8] S. Foster, E. M. Wenzel, R. M. Taylor: Real-time synthesis of complex acoustic environments; Proc. IEEE Workshop on Applications of Digital Signal Processing to Audio and Acoustics, 1991.

[9] J.-M. Jot, V. Larcher, O. Warusfel: Digital signal processing issues in the context of binaural and transaural stereophony; Proc. 98th Conv. Audio Engineering Society, preprint 3980, 1995.

[10] D. H. Cooper, J. L. Bauck: Prospects for transaural recording; J. Audio Engineering Society, vol. 37, no. 1/2, 1989.

[11] M. A. Casey, W. G. Gardner, S. Basu: Vision steered beam-forming and transaural rendering for the artificial life interactive video environment (ALIVE); Proc. 99th Conv. Audio Engineering Society, preprint 4052, 1995.

[12] J. L. Bauck, D. H. Cooper: Generalized transaural stereo; Proc. 93rd Conv. Audio Engineering Society, preprint 3401, 1992.

[13] M. R. Schroeder: Natural-sounding artificial reverberation; J. Audio Engineering Society, vol. 10, no. 3, 1962.

[14] J. A. Moorer: About this reverberation business; Computer Music Journal, vol. 3, no. 2, 1979.

[15] J. Stautner, M. Puckette: Designing multi-channel reverberators; Computer Music Journal, vol. 6, no. 1, 1982.

[16] G. Kendall, W. Martens, D. Freed, D. Ludwig, R. Karstens: Image-model reverberation from recirculating delays; Proc. 81st Conv. Audio Engineering Society, preprint 2408, 1986.

[17] D. Griesinger: Practical processors and programs for digital reverberation; Proc. 7th Audio Engineering Society International Conf., 1989.

[18] J.-M. Jot, A. Chaigne: Digital delay networks for designing artificial reverberators; Proc. 90th Conv. Audio Engineering Society, preprint 3030, 1991.

[19] J.-M. Jot: Etude et réalisation d'un spatialisateur de sons par modèles physiques et perceptifs [Study and implementation of a sound spatializer based on physical and perceptual models]; Doctoral dissertation, Télécom Paris, 1992.

[20] F. R. Moore: A general model for spatial processing of sounds; Computer Music Journal, vol. 7, no. 6, 1983.

[21] W. G. Gardner: Efficient convolution without input-output delay; J. Audio Engineering Society, vol. 43, no. 3, 1995.

[22] A. Reilly, D. McGrath: Convolution processing for realistic reverberation; Proc. 98th Conv. Audio Engineering Society, preprint 3977, 1995.

[23] J.-P. Jullien, E. Kahle, S. Winsberg, O. Warusfel: Some results on the objective and perceptual characterization of room acoustical quality in both laboratory and real environments; Proc. Institute of Acoustics, vol. XIV, no. 2, 1992.

[24] J.-P. Jullien: Structured model for the representation and the control of room acoustical quality; Proc. 15th International Conf. on Acoustics, 1995.

[25] J.-P. Jullien, O. Warusfel: Technologies et perception auditive de l'espace [Technologies and auditory perception of space]; Les Cahiers de l'Ircam, vol. 5 "L'Espace", 1994.

[26] M. Puckette: Combining event and signal processing in the Max graphical programming environment; Computer Music Journal, vol. 15, no. 3, 1991.

[27] O. Warusfel: Etude des paramètres liés à la prise de son pour les applications d'acoustique virtuelle [Study of sound-recording parameters for virtual acoustics applications]; Proc. 1st French Congress on Acoustics, 1990.

[28] G. Bloch, G. Assayag, O. Warusfel, J.-P. Jullien: Spatializer: from room acoustics to virtual acoustics; Proc. International Computer Music Conf., 1992.
